Scrapy in Python (How It Works for Developers)

Effectiveness and efficiency are essential when extracting data online and generating documents. Pulling data from websites and turning it into professional-grade documents calls for a smooth integration of solid tools and frameworks.

Enter Scrapy, a web scraping framework for Python, and IronPDF: two capable libraries that work together to streamline online data extraction and the creation of dynamic PDFs.

With Scrapy, a leading web crawling and scraping library for Python, developers can navigate complex websites and extract structured data quickly and accurately. Its robust XPath and CSS selectors and its asynchronous architecture make it a strong choice for scraping jobs of any complexity.

IronPDF, by contrast, is a powerful .NET library for creating, editing, and manipulating PDF documents programmatically. Its PDF tooling, which includes HTML-to-PDF conversion and PDF editing, gives developers a complete solution for producing dynamic, visually polished PDF documents.

This post walks through integrating Scrapy with IronPDF and shows how the pair changes the way web scraping and document creation are done. It covers how the two libraries work together to simplify complex jobs and speed up development workflows, from scraping web data with Scrapy to generating PDF reports dynamically with IronPDF.

Let's explore what is possible in web scraping and document generation by using IronPDF to get the most out of Scrapy.

Asynchronous Architecture

Scrapy's asynchronous architecture lets it process several requests at once. This improves efficiency and speeds up scraping, particularly on complicated websites or with large volumes of data.
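As a rough sketch of how that concurrency is usually tuned, the fragment below shows a few options that could go in a project's settings.py. The setting names are Scrapy's own; the values are illustrative assumptions, not recommendations taken from this article.

```python
# settings.py fragment: the values below are illustrative assumptions
CONCURRENT_REQUESTS = 16            # upper bound on requests in flight overall
CONCURRENT_REQUESTS_PER_DOMAIN = 8  # upper bound per target domain
DOWNLOAD_TIMEOUT = 30               # seconds before a pending download is abandoned
```

Lower values are gentler on the target site; higher values trade politeness for throughput.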

Crawl Management

Scrapy has solid crawl-management features, such as automatic URL filtering, configurable request scheduling, and built-in handling of robots.txt directives. Developers can tune crawl behavior to fit their own needs and to stay within a website's guidelines.
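To make the robots.txt part concrete, here is a minimal sketch of the kind of check a crawler performs before fetching a URL. It uses Python's standard urllib.robotparser rather than Scrapy's internal implementation, and the robots.txt content, the is_allowed helper, and the user-agent name are all hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network access
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(url: str, user_agent: str = "my-crawler") -> bool:
    """Return True if the inlined robots.txt rules permit fetching the URL."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed("http://example.com/quotes/"))       # True
print(is_allowed("http://example.com/private/data"))  # False
```

In a Scrapy project the equivalent behavior is switched on with ROBOTSTXT_OBEY = True in settings.py, so a check like this does not have to be written by hand.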

XPath and CSS Selectors

Scrapy lets users navigate and select elements within HTML pages using XPath and CSS selectors. Because developers can target specific elements or patterns on a page, data extraction becomes more precise and reliable.

Item Pipeline

With Scrapy's item pipeline, developers can define reusable components that process scraped data before it is exported or stored. Steps such as cleaning, validation, transformation, and deduplication help keep the extracted data accurate and consistent.
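A pipeline component is an ordinary Python class with a process_item method. The sketch below shows a cleaning step only; the class name CleanQuotePipeline and the 'text' and 'author' field names are assumptions chosen for illustration.

```python
class CleanQuotePipeline:
    """Hypothetical item pipeline that tidies the text and author fields."""

    def process_item(self, item, spider):
        # Trim whitespace, then strip straight or curly quote marks around the text
        text = item.get("text") or ""
        item["text"] = text.strip().strip('\u201c\u201d"')

        # Collapse runs of whitespace inside the author name
        author = item.get("author") or ""
        item["author"] = " ".join(author.split())

        # Returning the item hands it to the next pipeline component
        return item
```

A class like this would be enabled through the ITEM_PIPELINES setting, using a module path such as myproject.pipelines.CleanQuotePipeline (the path is an assumption about project layout).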

Built-in Middleware

Scrapy ships with a set of middleware components that provide features such as automatic cookie handling, request throttling, and user-agent and proxy handling (rotating either one typically takes a custom or third-party middleware). These components are easy to configure and customize to improve scraping efficiency and address common problems.

Extensible Architecture

Thanks to Scrapy's modular, extensible architecture, developers can customize and expand its capabilities further by writing custom middleware, extensions, and pipelines. That adaptability makes it easy to fit Scrapy into existing processes and tailor it to specific scraping needs.
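As one possible illustration of a custom component, the sketch below outlines a downloader middleware that picks a User-Agent header for each outgoing request. The class name and the placeholder agent strings are hypothetical; a real project would list actual browser user agents.

```python
import random


class RandomUserAgentMiddleware:
    """Hypothetical downloader middleware that sets a User-Agent per request."""

    # Placeholder strings, not real browser user agents
    USER_AGENTS = ["example-agent/1.0", "example-agent/2.0"]

    def process_request(self, request, spider):
        # Overwrite the header before the request is downloaded
        request.headers["User-Agent"] = random.choice(self.USER_AGENTS)
        # Returning None tells Scrapy to keep processing the request normally
        return None
```

Such a class would be registered in the DOWNLOADER_MIDDLEWARES setting with a priority number, in the same way pipelines are registered in ITEM_PIPELINES.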

Create and Configure Scrapy in Python

Install Scrapy

Install Scrapy with pip by running the following command:

    pip install scrapy

Define a Spider

To define your spider, create a new Python file (such as example.py) in the spiders/ directory. Here is a basic spider that extracts quotes from a URL:

    import scrapy


    class QuotesSpider(scrapy.Spider):
        # Name of the spider
        name = 'quotes'

        # Starting URL
        start_urls = ['http://quotes.toscrape.com']

        def parse(self, response):
            # Iterate through each quote block in the response
            for quote in response.css('div.quote'):
                # Extract and yield quote details
                yield {
                    'text': quote.css('span.text::text').get(),
                    'author': quote.css('span small.author::text').get(),
                    'tags': quote.css('div.tags a.tag::text').getall(),
                }

            # Identify and follow the next page link
            next_page = response.css('li.next a::attr(href)').get()
            if next_page is not None:
                yield response.follow(next_page, self.parse)

Configure Settings

To configure Scrapy project parameters such as the user agent, download delays, and pipelines, edit the settings.py file. Here is an example that sets the user agent and enables a pipeline:

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = True

    # Set user-agent
    USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'

    # Configure pipelines
    ITEM_PIPELINES = {
        'myproject.pipelines.MyPipeline': 300,
    }

Getting Started

Getting started with Scrapy and IronPDF means combining Scrapy's robust web scraping capabilities with IronPDF's dynamic PDF production features. The steps below set up a Scrapy project so that you can extract data from websites and use IronPDF to create a PDF document containing that data.

What is IronPDF?

IronPDF is a powerful .NET library for creating, editing, and modifying PDF documents programmatically in C#, VB.NET, and other .NET languages. Because it gives developers a broad set of functions for dynamically creating high-quality PDFs, it is a popular choice for many applications.

Features of IronPDF

PDF generation: With IronPDF, programmers can create new PDF documents or convert existing HTML elements such as tags, text, images, and other file formats into PDFs. This is especially useful for creating reports, invoices, receipts, and other documents dynamically.

HTML-to-PDF conversion: IronPDF makes it easy for developers to turn HTML documents, including JavaScript and CSS styling, into PDF files. This allows PDFs to be created from web pages, dynamically generated content, and HTML templates.

Modifying and editing PDF documents: IronPDF provides a comprehensive set of functions for modifying existing PDF documents. Developers can merge several PDF files, split them into separate documents, remove pages, and add bookmarks, annotations, and watermarks, among other features, to customize PDFs to their requirements.

How to Install IronPDF

After making sure Python is installed on your computer, use pip to install IronPDF.

    pip install ironpdf

Scrapy Project with IronPDF

To define your spider, create a new Python file (such as example.py) in the spider directory of your Scrapy project (myproject/myproject/spiders). Here is a basic spider that extracts quotes from a URL:

import scrapy

from IronPdf import *

class QuotesSpider(scrapy.Spider):

name = 'quotes'

# Web page link

start_urls = ['http://quotes.toscrape.com']

def parse(self, response):

quotes = []

for quote in response.css('div.quote'):

title = quote.css('span.text::text').get()

content = quote.css('span small.author::text').get()

quotes.append((title, content)) # Append quote to list

# Generate PDF document using IronPDF

renderer = ChromePdfRenderer()

pdf = renderer.RenderHtmlAsPdf(self.get_pdf_content(quotes))

pdf.SaveAs("quotes.pdf")

def get_pdf_content(self, quotes):

# Generate HTML content for PDF using extracted quotes

html_content = "<html><head><title>Quotes</title></head><body>"

for title, content in quotes:

html_content += f"<h2>{title}</h2><p>Author: {content}</p>"

html_content += "</body></html>"

return html_contentimport scrapy

from IronPdf import *

class QuotesSpider(scrapy.Spider):

name = 'quotes'

# Web page link

start_urls = ['http://quotes.toscrape.com']

def parse(self, response):

quotes = []

for quote in response.css('div.quote'):

title = quote.css('span.text::text').get()

content = quote.css('span small.author::text').get()

quotes.append((title, content)) # Append quote to list

# Generate PDF document using IronPDF

renderer = ChromePdfRenderer()

pdf = renderer.RenderHtmlAsPdf(self.get_pdf_content(quotes))

pdf.SaveAs("quotes.pdf")

def get_pdf_content(self, quotes):

# Generate HTML content for PDF using extracted quotes

html_content = "<html><head><title>Quotes</title></head><body>"

for title, content in quotes:

html_content += f"<h2>{title}</h2><p>Author: {content}</p>"

html_content += "</body></html>"

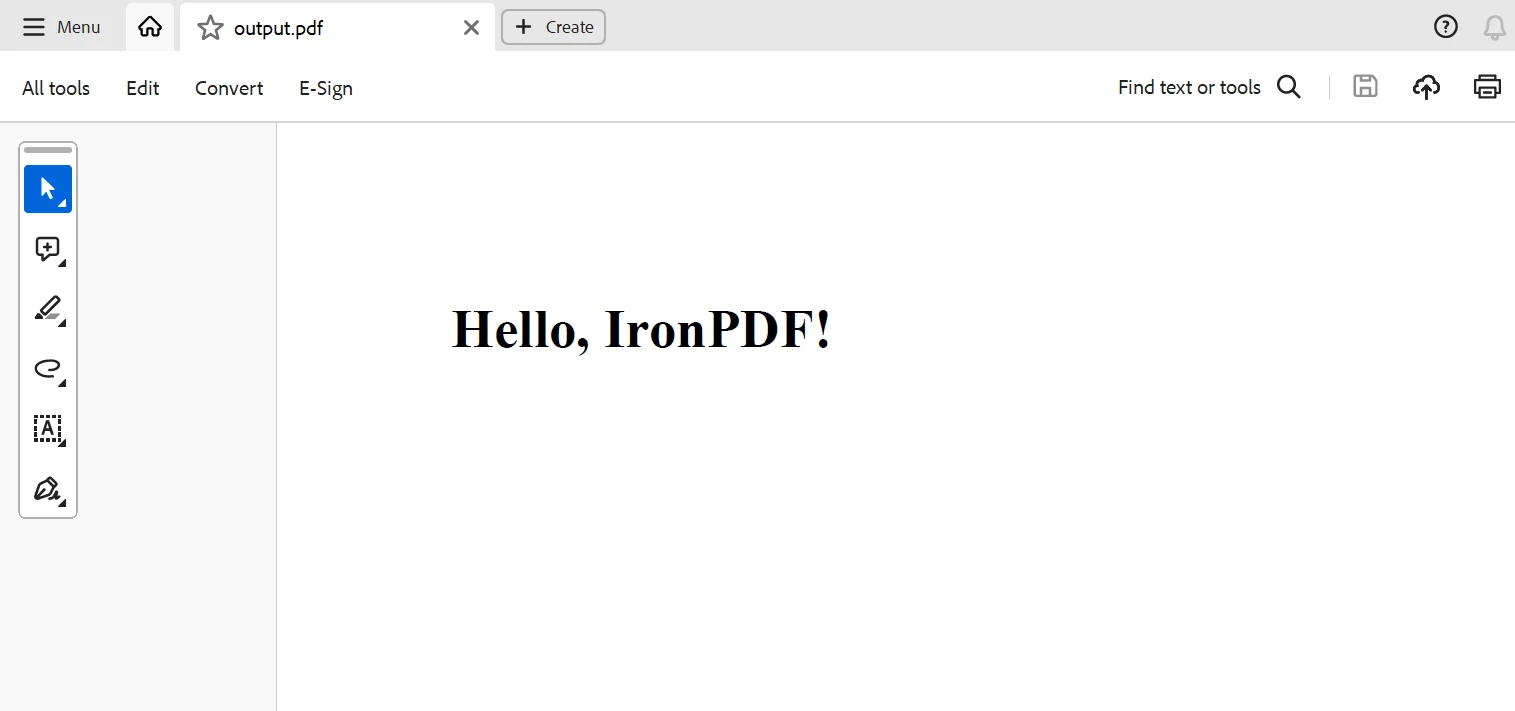

return html_contentEn el ejemplo de código anterior de un proyecto Scrapy con IronPDF, se está utilizando IronPDF para crear un documento PDF utilizando los datos que han sido extraídos usando Scrapy.

Here, the spider's parse method collects quotes from the web page and uses the get_pdf_content function to build the HTML content for the PDF file. IronPDF then renders that HTML as a PDF document and saves it as quotes.pdf.
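One detail worth considering when building HTML from scraped text: the text may contain characters such as < or & that would be read as markup. The following is a minimal sketch of an escaped variant of get_pdf_content, written as a standalone function; the name build_quotes_html is hypothetical and the escaping step is an addition, not part of the spider above.

```python
from html import escape


def build_quotes_html(quotes):
    """Build an HTML page from (text, author) pairs, escaping scraped values."""
    parts = ["<html><head><title>Quotes</title></head><body>"]
    for text, author in quotes:
        # escape() turns <, > and & into entities so they render as plain text
        safe_text = escape(text or "")
        safe_author = escape(author or "")
        parts.append(f"<h2>{safe_text}</h2><p>Author: {safe_author}</p>")
    parts.append("</body></html>")
    return "".join(parts)


print(build_quotes_html([("a < b & c", "Jane Doe")]))
```

The returned string can be handed to the renderer in the same way as the output of get_pdf_content.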

Conclusion

In summary, combining Scrapy and IronPDF gives developers a solid option for automating web scraping and producing PDF documents on the fly. IronPDF's flexible PDF production features, together with Scrapy's powerful crawling and scraping capabilities, provide a smooth process for collecting structured data from web pages and converting it into professional-quality PDF reports, invoices, or other documents.

With Scrapy spiders in Python, developers can navigate the complexities of the web effectively, retrieve information from many sources, and organize it systematically. Scrapy's flexible framework, asynchronous architecture, and support for XPath and CSS selectors give it the flexibility and scalability needed to handle a variety of web scraping tasks.

IronPDF includes a lifetime license, which is reasonably priced when bought as part of a bundle. The bundle offers excellent value at only $799 (a one-time purchase for multiple systems). License holders get 24/7 access to online technical support. For more information on pricing, please visit the website. Visit this page to learn more about Iron Software's products.

Frequently Asked Questions

How can I integrate Scrapy with a PDF generation tool?

You can integrate Scrapy with a PDF generation tool such as IronPDF by first using Scrapy to extract structured data from websites, and then using IronPDF to convert that data into dynamic PDF documents.

What is the best way to extract data and convert it into a PDF?

A good way to extract data and convert it into a PDF is to use Scrapy to extract the data efficiently and IronPDF to generate a high-quality PDF from the extracted content.

How can I convert HTML to PDF in Python?

Although IronPDF is a .NET library, you can use it from Python through interoperability solutions such as Python.NET to convert HTML to PDF with IronPDF's conversion methods.

What are the advantages of using Scrapy for web data extraction?

Scrapy offers advantages such as asynchronous processing, robust XPath and CSS selectors, and customizable middleware, all of which streamline data extraction from complex websites.

Can I automate the creation of PDFs from web data?

Yes. You can automate PDF creation from web data by combining Scrapy for data extraction with IronPDF for PDF generation, giving you an uninterrupted workflow from extraction to document creation.

What is the role of middleware in Scrapy?

Middleware in Scrapy lets you control and customize how requests and responses are processed, enabling features such as automatic URL filtering and user-agent rotation to improve extraction efficiency.

How do you define a spider in Scrapy?

To define a spider in Scrapy, create a new Python file in your project's spiders directory and implement a class that extends scrapy.Spider, with methods such as parse to handle data extraction.

What makes IronPDF a suitable choice for PDF generation?

IronPDF is a suitable choice for PDF generation because it offers comprehensive functions for HTML-to-PDF conversion, dynamic PDF creation, editing, and manipulation, which makes it versatile across a range of document generation needs.

How can I improve web data extraction and PDF creation?

Improve web data extraction and PDF creation by using Scrapy for effective data extraction and IronPDF to convert the extracted data into professionally formatted PDF documents.