AI-Powered PDF Processing in C#: Summarize, Extract, and Analyze Documents with IronPDF

PDFs have become the universal language of business documentation, legal contracts, financial reports, and academic research. Yet for decades, the wealth of information locked inside these documents remained largely inaccessible to automated systems. Traditional PDF processing could extract raw text, but understanding what that text meant—identifying key clauses in contracts, extracting structured financial data, or answering complex questions about document content—required painstaking manual review.

Modern large language models (LLMs) change this: they can read and analyze PDF content with a level of comprehension that earlier text-extraction tooling lacked. Anthropic's Claude Opus 4.6 (released February 2026) introduces agent teams and a 1M-token context window for processing entire document libraries in a single session. Combined with GPT-5's 272,000-token input context window and unified reasoning capabilities, these models can identify contract obligations, summarize PDF documents into executive briefings, extract data into JSON format, and answer nuanced questions about document content.

IronPDF rises to this challenge by providing seamless integration between C# applications and AI services like OpenAI and Azure OpenAI. Through the IronPdf.Extensions.AI package, developers can add document summarization, intelligent querying, and structured data extraction to their .NET applications with just a few lines of code. Built on Microsoft Semantic Kernel, this integration handles the complexity of preparing PDFs for AI consumption, managing context windows, and orchestrating multi-step AI workflows—letting you focus on building powerful document intelligence features rather than wrestling with infrastructure.

In this tutorial, we'll explore how to harness AI for PDF processing in C#. You'll learn to summarize documents, extract structured data, build question-answering systems, implement RAG (Retrieval-Augmented Generation) patterns for long documents, and process document libraries at scale. Whether you're building legal discovery tools, financial analysis systems, or research automation platforms, you'll discover how IronPDF and the latest AI models can transform your document workflows.

TL;DR: Quickstart Guide

Transform any PDF into an actionable summary after initializing the AI engine. IronPDF's AI extension connects seamlessly to Azure OpenAI through Microsoft Semantic Kernel, allowing you to extract key insights from documents.

Get started making PDFs with NuGet now:

Install IronPDF with NuGet Package Manager

Copy and run this code snippet.

await IronPdf.AI.PdfAIEngine.Summarize("contract.pdf", "summary.txt", azureEndpoint, azureApiKey);

Deploy to test on your live environment

Before using AI features, you must initialize the Semantic Kernel with your Azure OpenAI credentials (see Initializing the AI Engine below). After you've purchased or signed up for a 30-day trial of IronPDF, add your license key at the start of your application.

IronPdf.License.LicenseKey = "KEY";

Imports IronPdf

IronPdf.License.LicenseKey = "KEY"

- TL;DR: Quickstart Guide

- The AI + PDF Opportunity

- IronPDF's Built-in AI Integration

- Document Summarization

- Intelligent Data Extraction

- Question-Answering Over Documents

- Batch AI Processing

- Real-World Use Cases

- Troubleshooting & Technical Support

The AI + PDF Opportunity

Why PDFs Are the Biggest Untapped Data Source

PDFs represent one of the largest repositories of structured business knowledge in the modern enterprise. Professional documents—contracts, financial statements, compliance reports, legal briefs, and research papers—are predominantly stored in PDF format. These documents contain critical business intelligence: contract terms that define obligations and liabilities, financial metrics that drive investment decisions, regulatory requirements that ensure compliance, and research findings that guide strategy.

Yet traditional approaches to PDF processing have been severely limited. Basic text extraction tools can pull raw characters from a page, but they lose crucial context: table structures collapse into jumbled text, multi-column layouts become nonsensical, and the semantic relationships between sections disappear.

The breakthrough comes from AI's ability to understand context and structure. Modern LLMs don't just see words—they comprehend document organization, recognize patterns like contract clauses or financial tables, and can extract meaning even from complex layouts. GPT-5's unified reasoning system with its real-time router and Claude Sonnet 4.5's enhanced agentic capabilities both demonstrate significantly reduced hallucination rates compared to earlier models, making them reliable for professional document analysis.

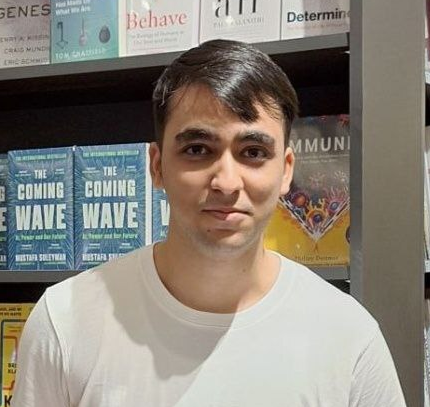

How LLMs Understand Document Structure

Large language models bring sophisticated natural language processing capabilities to PDF analysis. GPT-5's hybrid architecture features multiple sub-models (main, mini, thinking, nano) with a real-time router that dynamically selects the optimal variant based on task complexity—simple questions route to faster models while complex reasoning tasks engage the full model.

Claude Opus 4.6 excels particularly at long-running agentic tasks, with agent teams that coordinate directly on segmented jobs and a 1M-token context window that can process entire document libraries without chunking.

This contextual understanding enables LLMs to perform tasks that require genuine comprehension. When analyzing a contract, an LLM can identify not just clauses containing the word "termination," but understand the specific conditions under which termination is permitted, the notice requirements involved, and the liabilities that result. The technical foundation enabling this capability is the transformer architecture that powers modern LLMs, with GPT-5's context window supporting up to 272,000 input tokens and Claude Sonnet 4.5's 200K token window providing comprehensive document coverage.

IronPDF's Built-in AI Integration

Installing IronPDF and AI Extensions

Getting started with AI-powered PDF processing requires the core IronPDF library, the AI extensions package, and Microsoft Semantic Kernel dependencies.

Install IronPDF with NuGet Package Manager:

PM > Install-Package IronPdf

PM > Install-Package IronPdf.Extensions.AI

PM > Install-Package Microsoft.SemanticKernel

PM > Install-Package Microsoft.SemanticKernel.Plugins.Memory

These packages work together to provide a complete solution. IronPDF handles all PDF-related operations (text extraction, page rendering, format conversion), while the AI extension manages the integration with language models through Microsoft Semantic Kernel.

Some of the Semantic Kernel APIs used here are marked experimental. Add <NoWarn>$(NoWarn);SKEXP0001;SKEXP0010;SKEXP0050</NoWarn> to your .csproj PropertyGroup to suppress the resulting compiler warnings.

Configuring Your OpenAI/Azure API Key

Before you can leverage AI features, you need to configure access to an AI service provider. IronPDF's AI extension supports both OpenAI and Azure OpenAI. Azure OpenAI is often preferred for enterprise applications because it provides enhanced security features, compliance certifications, and the ability to keep data within specific geographic regions.

To configure Azure OpenAI, you'll need your Azure endpoint URL, API key, and deployment names for both chat and embedding models from the Azure portal.

Initializing the AI Engine

IronPDF's AI extension uses Microsoft Semantic Kernel under the hood. Before using any AI features, you must initialize the kernel with your Azure OpenAI credentials and configure the memory store for document processing.

// Initialize IronPDF AI with Azure OpenAI credentials

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel with Azure OpenAI

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

// Create memory store for document embeddings

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

// Initialize IronPDF AI

IronDocumentAI.Initialize(kernel, memory);

Console.WriteLine("IronPDF AI initialized successfully with Azure OpenAI");

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel with Azure OpenAI

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

' Create memory store for document embeddings

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

' Initialize IronPDF AI

IronDocumentAI.Initialize(kernel, memory)

Console.WriteLine("IronPDF AI initialized successfully with Azure OpenAI")

The initialization creates two key components:

- Kernel: Handles chat completions and text embedding generation through Azure OpenAI

- Memory: Stores document embeddings for semantic search and retrieval operations

Once initialized with IronDocumentAI.Initialize(), you can use AI features throughout your application. For production applications, storing credentials in environment variables or Azure Key Vault is strongly recommended.
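As a minimal sketch of the environment-variable approach, the helper below reads the four settings used in the examples and fails fast when one is missing. The variable names (AZURE_OPENAI_ENDPOINT and so on) and the AzureOpenAISettings type are assumptions chosen for illustration; neither IronPDF nor Azure requires these particular names.

```csharp
using System;

// Hypothetical settings holder; the type and variable names are illustrative,
// not part of IronPDF or Semantic Kernel.
public sealed record AzureOpenAISettings(
    string Endpoint,
    string ApiKey,
    string ChatDeployment,
    string EmbeddingDeployment)
{
    // Reads each value from the process environment and throws if any is missing.
    public static AzureOpenAISettings FromEnvironment()
    {
        return new AzureOpenAISettings(
            Require("AZURE_OPENAI_ENDPOINT"),
            Require("AZURE_OPENAI_API_KEY"),
            Require("AZURE_OPENAI_CHAT_DEPLOYMENT"),
            Require("AZURE_OPENAI_EMBEDDING_DEPLOYMENT"));
    }

    private static string Require(string name)
    {
        var value = Environment.GetEnvironmentVariable(name);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException(
                $"Environment variable '{name}' is not set.");
        }
        return value;
    }
}
```

The resulting Endpoint, ApiKey, ChatDeployment, and EmbeddingDeployment values can then replace the hard-coded strings passed to Kernel.CreateBuilder() and MemoryBuilder in the initialization example.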

How IronPDF Prepares PDFs for AI Context

One of the most challenging aspects of AI-powered PDF processing is preparing documents for consumption by language models. While GPT-5 supports up to 272,000 input tokens and Claude Opus 4.6 now offers a 1M token context window, a single legal contract or financial report can still easily exceed older models' limits.

IronPDF's AI extension handles this complexity through intelligent document preparation. When you call an AI method, IronPDF first extracts text from the PDF while preserving structural information—identifying paragraphs, preserving table structures, and maintaining the relationships between sections.

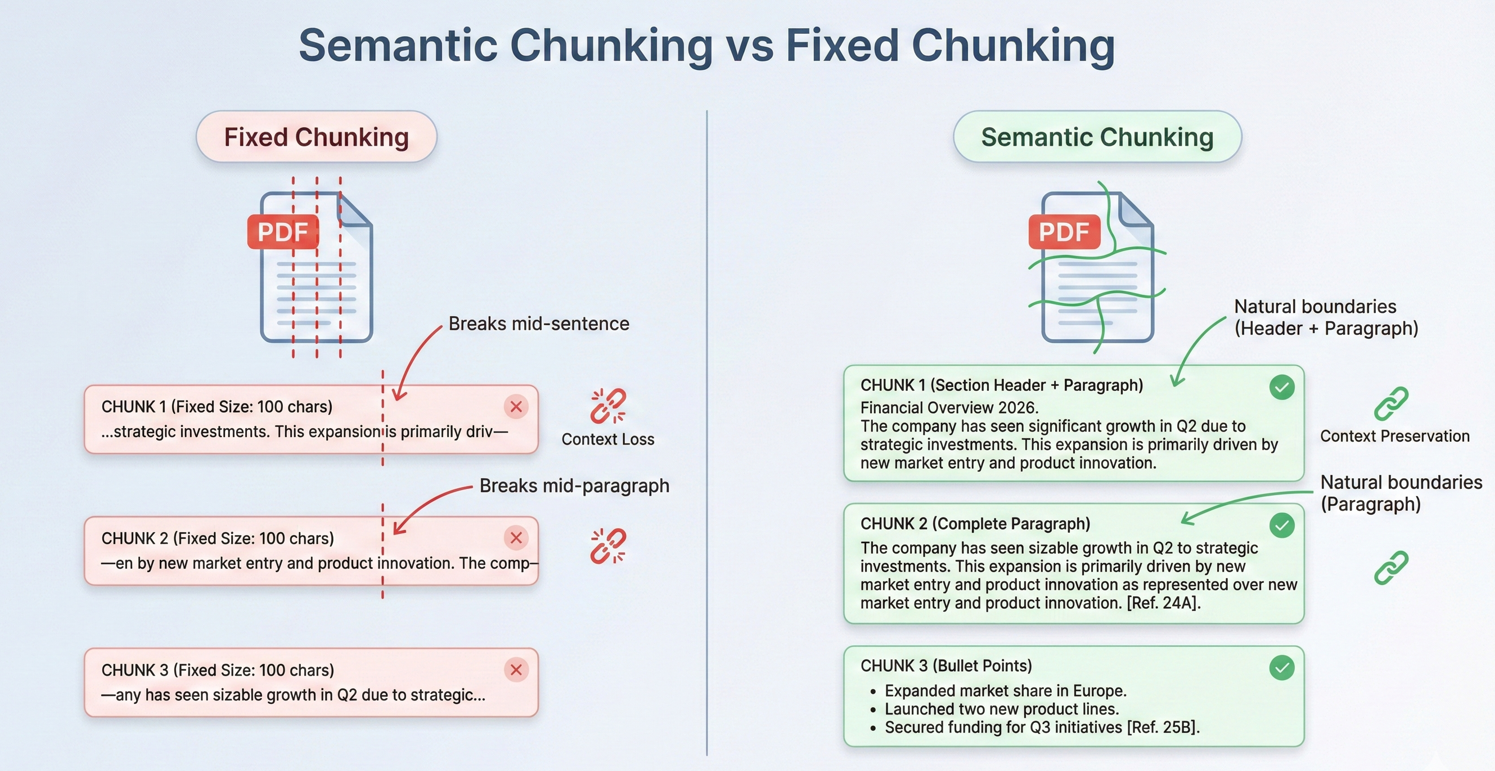

For documents that exceed context limits, IronPDF implements strategic chunking at semantic breakpoints—natural divisions in document structure like section headers, page breaks, or paragraph boundaries.
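To make the idea of chunking at semantic breakpoints concrete, here is a simplified sketch that splits extracted text at blank-line paragraph boundaries and packs whole paragraphs into chunks under a character budget. It is not IronPDF's internal implementation, which this article does not show. The ParagraphChunker name and the maxChars parameter are assumptions for illustration, and a character budget only approximates a token limit.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Illustrative only: a hypothetical helper, not IronPDF's actual chunking logic.
public static class ParagraphChunker
{
    // Packs whole paragraphs into chunks of at most maxChars characters.
    // A single paragraph longer than maxChars becomes its own oversized chunk.
    public static List<string> Chunk(string text, int maxChars)
    {
        if (maxChars <= 0) throw new ArgumentOutOfRangeException(nameof(maxChars));

        var chunks = new List<string>();
        var current = new StringBuilder();

        // Treat a blank line as a paragraph boundary.
        string[] paragraphs = text.Replace("\r\n", "\n")
            .Split(new[] { "\n\n" }, StringSplitOptions.RemoveEmptyEntries);

        foreach (string raw in paragraphs)
        {
            string paragraph = raw.Trim();
            if (paragraph.Length == 0) continue;

            // Close the current chunk when this paragraph would exceed the budget.
            if (current.Length > 0 && current.Length + 2 + paragraph.Length > maxChars)
            {
                chunks.Add(current.ToString());
                current.Clear();
            }

            if (current.Length > 0) current.Append("\n\n");
            current.Append(paragraph);
        }

        if (current.Length > 0) chunks.Add(current.ToString());
        return chunks;
    }
}
```

Splitting only at paragraph boundaries keeps each chunk readable on its own, at the cost of uneven chunk sizes. A production splitter would also look for headings and page breaks, as described above.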

Document Summarization

Single Document Summaries

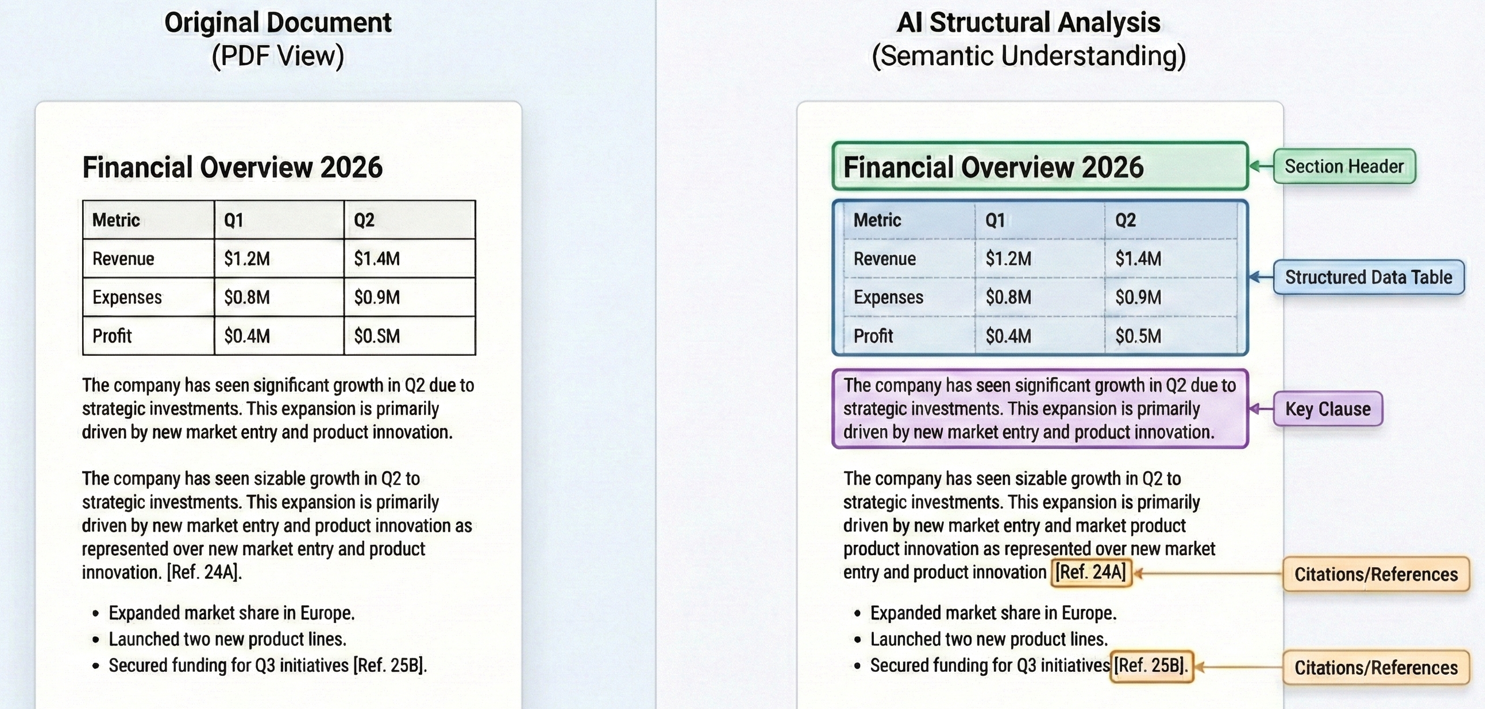

Document summarization delivers immediate value by condensing lengthy documents into digestible insights. The Summarize method handles the core workflow: extracting text, preparing it for AI consumption, requesting a summary from the language model, and returning the result as a string that you can display or save.

Input

The code loads a PDF using PdfDocument.FromFile() and calls pdf.Summarize() to generate a concise summary, then saves the result to a text file.

// Summarize a PDF document using IronPDF AI

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

// Load and summarize PDF

var pdf = PdfDocument.FromFile("sample-report.pdf");

string summary = await pdf.Summarize();

Console.WriteLine("Document Summary:");

Console.WriteLine(summary);

File.WriteAllText("report-summary.txt", summary);

Console.WriteLine("\nSummary saved to report-summary.txt");

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

' Load and summarize PDF

Dim pdf = PdfDocument.FromFile("sample-report.pdf")

Dim summary As String = Await pdf.Summarize()

Console.WriteLine("Document Summary:")

Console.WriteLine(summary)

File.WriteAllText("report-summary.txt", summary)

Console.WriteLine(vbCrLf & "Summary saved to report-summary.txt")

Console Output

The summarization process uses sophisticated prompting to ensure high-quality results. Both GPT-5 and Claude Sonnet 4.5 in 2026 feature significantly improved instruction following capabilities, ensuring summaries capture essential information while remaining concise and readable.

For a more detailed explanation of document summarization techniques and advanced options, please refer to our how-to guide.

Multi-Document Synthesis

Many real-world scenarios require synthesizing information across multiple documents. A legal team might need to identify common clauses across a portfolio of contracts, or a financial analyst might want to compare metrics across quarterly reports.

The approach to multi-document synthesis involves processing each document individually to extract key information, then aggregating these insights for final synthesis.

This example iterates through multiple PDFs, calling pdf.Summarize() on each, then uses pdf.Query() with the combined summaries to generate a unified synthesis.

// Synthesize insights across multiple related documents (e.g., quarterly reports into annual summary)

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

// Define documents to synthesize

string[] documentPaths = {

"Q1-report.pdf",

"Q2-report.pdf",

"Q3-report.pdf",

"Q4-report.pdf"

};

var documentSummaries = new List<string>();

// Summarize each document

foreach (string path in documentPaths)

{

var pdf = PdfDocument.FromFile(path);

string summary = await pdf.Summarize();

documentSummaries.Add($"=== {Path.GetFileName(path)} ===\n{summary}");

Console.WriteLine($"Processed: {path}");

}

// Combine and synthesize across all documents

string combinedSummaries = string.Join("\n\n", documentSummaries);

var synthesisDoc = PdfDocument.FromFile(documentPaths[0]);

string synthesisQuery = @"Based on the quarterly summaries below, provide an annual synthesis:

1. Overall trends across quarters

2. Key achievements and challenges

3. Year-over-year patterns

Summaries:

" + combinedSummaries;

string synthesis = await synthesisDoc.Query(synthesisQuery);

Console.WriteLine("\n=== Annual Synthesis ===");

Console.WriteLine(synthesis);

File.WriteAllText("annual-synthesis.txt", synthesis);

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

Imports System.IO

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

' Define documents to synthesize

Dim documentPaths As String() = {

"Q1-report.pdf",

"Q2-report.pdf",

"Q3-report.pdf",

"Q4-report.pdf"

}

Dim documentSummaries = New List(Of String)()

' Summarize each document

For Each path As String In documentPaths

Dim pdf = PdfDocument.FromFile(path)

Dim summary As String = Await pdf.Summarize()

documentSummaries.Add($"=== {Path.GetFileName(path)} ==={vbCrLf}{summary}")

Console.WriteLine($"Processed: {path}")

Next

' Combine and synthesize across all documents

Dim combinedSummaries As String = String.Join(vbCrLf & vbCrLf, documentSummaries)

Dim synthesisDoc = PdfDocument.FromFile(documentPaths(0))

Dim synthesisQuery As String = "Based on the quarterly summaries below, provide an annual synthesis:" & vbCrLf &

"1. Overall trends across quarters" & vbCrLf &

"2. Key achievements and challenges" & vbCrLf &

"3. Year-over-year patterns" & vbCrLf & vbCrLf &

"Summaries:" & vbCrLf & combinedSummaries

Dim synthesis As String = Await synthesisDoc.Query(synthesisQuery)

Console.WriteLine(vbCrLf & "=== Annual Synthesis ===")

Console.WriteLine(synthesis)

File.WriteAllText("annual-synthesis.txt", synthesis)

This pattern scales to larger document sets. The loop above runs sequentially; by processing documents in parallel and managing intermediate results, you can analyze hundreds or thousands of documents while maintaining a coherent synthesis, subject to your provider's rate limits and the context window available for the combined summaries.
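One way to run the per-document step concurrently while capping the number of in-flight API calls is sketched below. The summarizeAsync delegate is a stand-in for whatever per-document call you use, and ParallelSummarizer and maxConcurrency are hypothetical names. Whether IronPDF's AI methods are safe to call concurrently is an assumption you should verify before relying on this.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Illustrative helper; not part of IronPDF.
public static class ParallelSummarizer
{
    // Runs summarizeAsync for every path with at most maxConcurrency calls in flight.
    // Results are returned in the same order as the input paths.
    public static async Task<string[]> SummarizeAllAsync(
        IReadOnlyList<string> paths,
        Func<string, Task<string>> summarizeAsync,
        int maxConcurrency)
    {
        if (maxConcurrency < 1) throw new ArgumentOutOfRangeException(nameof(maxConcurrency));

        using var gate = new SemaphoreSlim(maxConcurrency);

        var tasks = paths.Select(async path =>
        {
            // Wait for a free slot before starting the call.
            await gate.WaitAsync();
            try
            {
                return await summarizeAsync(path);
            }
            finally
            {
                gate.Release();
            }
        }).ToList();

        return await Task.WhenAll(tasks);
    }
}
```

With the API used in this tutorial, a call might look like `await ParallelSummarizer.SummarizeAllAsync(documentPaths, p => PdfDocument.FromFile(p).Summarize(), 4)`, assuming Summarize() returns a Task of string as the examples suggest. A low concurrency limit is a reasonable starting point for staying under provider rate limits.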

Executive Summary Generation

Executive summaries require a different approach than standard summarization. Rather than simply condensing content, an executive summary must identify the most business-critical information, highlight key decisions or recommendations, and present findings in a format suitable for leadership review.

The code uses pdf.Query() with a structured prompt requesting key decisions, critical findings, financial impact, and risk assessment in business language.

// Generate executive summary from strategic documents for C-suite leadership

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

var pdf = PdfDocument.FromFile("strategic-plan.pdf");

string executiveQuery = @"Create an executive summary for C-suite leadership. Include:

**Key Decisions Required:**

- List any decisions needing executive approval

**Critical Findings:**

- Top 3-5 most important findings (bullet points)

**Financial Impact:**

- Revenue/cost implications if mentioned

**Risk Assessment:**

- High-priority risks identified

**Recommended Actions:**

- Immediate next steps

Keep under 500 words. Use business language appropriate for board presentation.";

string executiveSummary = await pdf.Query(executiveQuery);

File.WriteAllText("executive-summary.txt", executiveSummary);

Console.WriteLine("Executive summary saved to executive-summary.txt");

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

Dim pdf = PdfDocument.FromFile("strategic-plan.pdf")

Dim executiveQuery As String = "Create an executive summary for C-suite leadership. Include:" & vbCrLf & vbCrLf &

"**Key Decisions Required:**" & vbCrLf &

"- List any decisions needing executive approval" & vbCrLf & vbCrLf &

"**Critical Findings:**" & vbCrLf &

"- Top 3-5 most important findings (bullet points)" & vbCrLf & vbCrLf &

"**Financial Impact:**" & vbCrLf &

"- Revenue/cost implications if mentioned" & vbCrLf & vbCrLf &

"**Risk Assessment:**" & vbCrLf &

"- High-priority risks identified" & vbCrLf & vbCrLf &

"**Recommended Actions:**" & vbCrLf &

"- Immediate next steps" & vbCrLf & vbCrLf &

"Keep under 500 words. Use business language appropriate for board presentation."

Dim executiveSummary As String = Await pdf.Query(executiveQuery)

File.WriteAllText("executive-summary.txt", executiveSummary)

Console.WriteLine("Executive summary saved to executive-summary.txt")

The resulting executive summary prioritizes actionable information over comprehensive coverage, giving decision-makers the essentials without overwhelming detail.

Intelligent Data Extraction

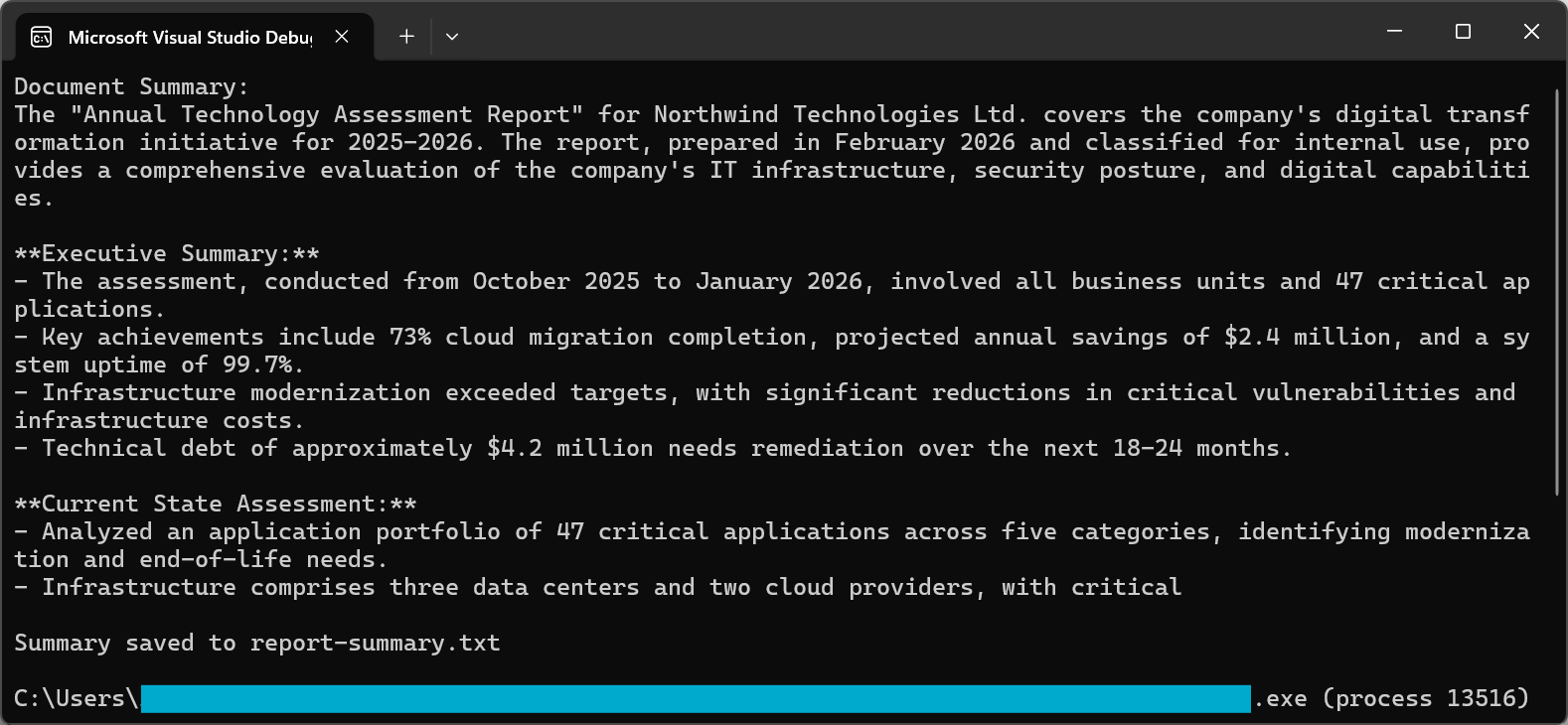

Extracting Structured Data to JSON

One of the most powerful applications of AI-powered PDF processing is extracting structured data from unstructured documents. The key to successful structured extraction in 2026 is using JSON schemas with structured output modes. GPT-5 introduces improved structured outputs, while Claude Sonnet 4.5 offers enhanced tool orchestration for reliable data extraction.

Input

The code calls pdf.Query() with a JSON schema prompt, then uses JsonSerializer.Deserialize() to parse and validate the extracted invoice data.

// Extract structured invoice data as JSON from PDF

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

using System.Text.Json;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

var pdf = PdfDocument.FromFile("sample-invoice.pdf");

// Define JSON schema for extraction

string extractionQuery = @"Extract invoice data and return as JSON with this exact structure:

{

""invoiceNumber"": ""string"",

""invoiceDate"": ""YYYY-MM-DD"",

""dueDate"": ""YYYY-MM-DD"",

""vendor"": {

""name"": ""string"",

""address"": ""string"",

""taxId"": ""string or null""

},

""customer"": {

""name"": ""string"",

""address"": ""string""

},

""lineItems"": [

{

""description"": ""string"",

""quantity"": number,

""unitPrice"": number,

""total"": number

}

],

""subtotal"": number,

""taxRate"": number,

""taxAmount"": number,

""total"": number,

""currency"": ""string""

}

Return ONLY valid JSON, no additional text.";

string jsonResponse = await pdf.Query(extractionQuery);

// Parse and save JSON

try

{

var invoiceData = JsonSerializer.Deserialize<JsonElement>(jsonResponse);

string formattedJson = JsonSerializer.Serialize(invoiceData, new JsonSerializerOptions { WriteIndented = true });

Console.WriteLine("Extracted Invoice Data:");

Console.WriteLine(formattedJson);

File.WriteAllText("invoice-data.json", formattedJson);

}

catch (JsonException)

{

Console.WriteLine("Unable to parse JSON response");

File.WriteAllText("invoice-raw-response.txt", jsonResponse);

}

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

Imports System.Text.Json

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

Dim pdf = PdfDocument.FromFile("sample-invoice.pdf")

' Define JSON schema for extraction

Dim extractionQuery As String = "Extract invoice data and return as JSON with this exact structure:" & vbCrLf & _

"{" & vbCrLf & _

" ""invoiceNumber"": ""string""," & vbCrLf & _

" ""invoiceDate"": ""YYYY-MM-DD""," & vbCrLf & _

" ""dueDate"": ""YYYY-MM-DD""," & vbCrLf & _

" ""vendor"": {" & vbCrLf & _

" ""name"": ""string""," & vbCrLf & _

" ""address"": ""string""," & vbCrLf & _

" ""taxId"": ""string or null""" & vbCrLf & _

" }," & vbCrLf & _

" ""customer"": {" & vbCrLf & _

" ""name"": ""string""," & vbCrLf & _

" ""address"": ""string""" & vbCrLf & _

" }," & vbCrLf & _

" ""lineItems"": [" & vbCrLf & _

" {" & vbCrLf & _

" ""description"": ""string""," & vbCrLf & _

" ""quantity"": number," & vbCrLf & _

" ""unitPrice"": number," & vbCrLf & _

" ""total"": number" & vbCrLf & _

" }" & vbCrLf & _

" ]," & vbCrLf & _

" ""subtotal"": number," & vbCrLf & _

" ""taxRate"": number," & vbCrLf & _

" ""taxAmount"": number," & vbCrLf & _

" ""total"": number," & vbCrLf & _

" ""currency"": ""string""" & vbCrLf & _

"}" & vbCrLf & _

vbCrLf & _

"Return ONLY valid JSON, no additional text."

Dim jsonResponse As String = Await pdf.Query(extractionQuery)

' Parse and save JSON

Try

Dim invoiceData = JsonSerializer.Deserialize(Of JsonElement)(jsonResponse)

Dim formattedJson As String = JsonSerializer.Serialize(invoiceData, New JsonSerializerOptions With {.WriteIndented = True})

Console.WriteLine("Extracted Invoice Data:")

Console.WriteLine(formattedJson)

File.WriteAllText("invoice-data.json", formattedJson)

Catch ex As JsonException

Console.WriteLine("Unable to parse JSON response")

File.WriteAllText("invoice-raw-response.txt", jsonResponse)

End Try

Partial Screenshot of Generated JSON File

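Many current models offer structured output modes that constrain responses to a supplied JSON schema, which greatly reduces malformed output when the mode is enabled. The example above requests JSON through the prompt alone, so it keeps the JsonException handler and saves the raw response whenever parsing fails.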
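A prompt-only request like the ones in this section can come back wrapped in a Markdown code fence or missing a field. The sketch below strips a surrounding fence and checks for required top-level properties before the data is used. JsonResponseCleaner, TryParse, and requiredProperties are hypothetical names for illustration; the code relies only on System.Text.Json.

```csharp
using System;
using System.Text.Json;

// Illustrative helper; not part of IronPDF.
public static class JsonResponseCleaner
{
    // Three backticks, the Markdown code fence marker.
    private static readonly string Fence = new string('`', 3);

    // Removes a leading fence line (with or without a language tag)
    // and the trailing fence, if the response is wrapped in one.
    public static string StripCodeFence(string response)
    {
        string text = response.Trim();
        if (!text.StartsWith(Fence, StringComparison.Ordinal)) return text;

        int firstNewline = text.IndexOf('\n');
        if (firstNewline < 0) return text;

        text = text.Substring(firstNewline + 1);
        int closingFence = text.LastIndexOf(Fence, StringComparison.Ordinal);
        if (closingFence >= 0) text = text.Substring(0, closingFence);
        return text.Trim();
    }

    // Returns true when the response parses as a JSON object that contains
    // every name in requiredProperties at the top level.
    public static bool TryParse(string response, string[] requiredProperties, out JsonElement root)
    {
        root = default;
        try
        {
            using JsonDocument doc = JsonDocument.Parse(StripCodeFence(response));
            if (doc.RootElement.ValueKind != JsonValueKind.Object) return false;

            foreach (string name in requiredProperties)
            {
                if (!doc.RootElement.TryGetProperty(name, out _)) return false;
            }

            // Clone so the element stays valid after the document is disposed.
            root = doc.RootElement.Clone();
            return true;
        }
        catch (JsonException)
        {
            return false;
        }
    }
}
```

For the invoice example, the required list might be invoiceNumber, lineItems, and total. A false result is a signal to log the raw response or retry the query.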

Contract Clause Identification

Legal contracts contain specific types of clauses that carry particular importance: termination provisions, liability limitations, indemnification requirements, intellectual property assignments, and confidentiality obligations. AI-powered clause identification automates this analysis while maintaining high accuracy.

This example uses pdf.Query() with a clause-focused JSON schema to extract contract type, parties, critical dates, and individual clauses with risk levels.

// Analyze contract clauses and identify key terms, risks, and critical dates

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

using System.Text.Json;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

var pdf = PdfDocument.FromFile("contract.pdf");

// Define JSON schema for contract analysis

string clauseQuery = @"Analyze this contract and identify key clauses. Return JSON:

{

""contractType"": ""string"",

""parties"": [""string""],

""effectiveDate"": ""string"",

""clauses"": [

{

""type"": ""Termination|Liability|Indemnification|Confidentiality|IP|Payment|Warranty|Other"",

""title"": ""string"",

""summary"": ""string"",

""riskLevel"": ""Low|Medium|High"",

""keyTerms"": [""string""]

}

],

""criticalDates"": [

{

""description"": ""string"",

""date"": ""string""

}

],

""overallRiskAssessment"": ""Low|Medium|High"",

""recommendations"": [""string""]

}

Focus on: termination rights, liability caps, indemnification, IP ownership, confidentiality, payment terms.

Return ONLY valid JSON.";

string analysisJson = await pdf.Query(clauseQuery);

try

{

var analysis = JsonSerializer.Deserialize<JsonElement>(analysisJson);

string formatted = JsonSerializer.Serialize(analysis, new JsonSerializerOptions { WriteIndented = true });

Console.WriteLine("Contract Clause Analysis:");

Console.WriteLine(formatted);

File.WriteAllText("contract-analysis.json", formatted);

// Display high-risk clauses

Console.WriteLine("\n=== High Risk Clauses ===");

foreach (var clause in analysis.GetProperty("clauses").EnumerateArray())

{

if (clause.GetProperty("riskLevel").GetString() == "High")

{

Console.WriteLine($"- {clause.GetProperty("type")}: {clause.GetProperty("summary")}");

}

}

}

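// GetProperty and GetString throw KeyNotFoundException or InvalidOperationException when a field is missing or has an unexpected type

catch (Exception ex) when (ex is JsonException or KeyNotFoundException or InvalidOperationException)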

{

Console.WriteLine("Unable to parse contract analysis");

File.WriteAllText("contract-analysis-raw.txt", analysisJson);

}

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

Imports System.Text.Json

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

Dim pdf = PdfDocument.FromFile("contract.pdf")

' Define JSON schema for contract analysis

Dim clauseQuery As String = "Analyze this contract and identify key clauses. Return JSON:

{

""contractType"": ""string"",

""parties"": [""string""],

""effectiveDate"": ""string"",

""clauses"": [

{

""type"": ""Termination|Liability|Indemnification|Confidentiality|IP|Payment|Warranty|Other"",

""title"": ""string"",

""summary"": ""string"",

""riskLevel"": ""Low|Medium|High"",

""keyTerms"": [""string""]

}

],

""criticalDates"": [

{

""description"": ""string"",

""date"": ""string""

}

],

""overallRiskAssessment"": ""Low|Medium|High"",

""recommendations"": [""string""]

}

Focus on: termination rights, liability caps, indemnification, IP ownership, confidentiality, payment terms.

Return ONLY valid JSON."

Dim analysisJson As String = Await pdf.Query(clauseQuery)

Try

Dim analysis = JsonSerializer.Deserialize(Of JsonElement)(analysisJson)

Dim formatted As String = JsonSerializer.Serialize(analysis, New JsonSerializerOptions With {.WriteIndented = True})

Console.WriteLine("Contract Clause Analysis:")

Console.WriteLine(formatted)

File.WriteAllText("contract-analysis.json", formatted)

' Display high-risk clauses

Console.WriteLine(vbCrLf & "=== High Risk Clauses ===")

For Each clause In analysis.GetProperty("clauses").EnumerateArray()

If clause.GetProperty("riskLevel").GetString() = "High" Then

Console.WriteLine($"- {clause.GetProperty("type")}: {clause.GetProperty("summary")}")

End If

Next

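' GetProperty and GetString throw KeyNotFoundException or InvalidOperationException when a field is missing or has an unexpected type

Catch ex As Exception When TypeOf ex Is JsonException OrElse TypeOf ex Is KeyNotFoundException OrElse TypeOf ex Is InvalidOperationException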

Console.WriteLine("Unable to parse contract analysis")

File.WriteAllText("contract-analysis-raw.txt", analysisJson)

End Try

This capability transforms contract review from a sequential, manual process into an automated, scalable workflow. Legal teams can quickly identify high-risk provisions across hundreds of contracts.
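
To illustrate the multi-contract case, here is a minimal sketch that scans several saved analysis results and collects every clause marked High. `HighRiskClauses` and the sample data are hypothetical; the field names follow the schema in the prompt above. It uses `TryGetProperty` so that a response missing a field is skipped rather than raising an exception.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper: list "file: type - summary" for every clause marked High.
// Each value in the dictionary is assumed to be valid JSON text.
List<string> HighRiskClauses(IReadOnlyDictionary<string, string> analysesByFile)
{
    var results = new List<string>();
    foreach (var (file, json) in analysesByFile)
    {
        using var doc = JsonDocument.Parse(json);
        if (doc.RootElement.ValueKind != JsonValueKind.Object ||
            !doc.RootElement.TryGetProperty("clauses", out var clauses) ||
            clauses.ValueKind != JsonValueKind.Array)
            continue; // No usable clause list in this analysis

        foreach (var clause in clauses.EnumerateArray())
        {
            if (clause.ValueKind == JsonValueKind.Object &&
                clause.TryGetProperty("riskLevel", out var risk) &&
                risk.ValueKind == JsonValueKind.String &&
                string.Equals(risk.GetString(), "High", StringComparison.OrdinalIgnoreCase))
            {
                string type = clause.TryGetProperty("type", out var t) ? t.ToString() : "Other";
                string summary = clause.TryGetProperty("summary", out var s) ? s.ToString() : "";
                results.Add($"{file}: {type} - {summary}");
            }
        }
    }
    return results;
}

// Made-up sample results standing in for saved analysis files
var analyses = new Dictionary<string, string>
{
    ["msa.pdf"] = @"{ ""clauses"": [ { ""type"": ""Liability"", ""summary"": ""No liability cap"", ""riskLevel"": ""High"" } ] }",
    ["nda.pdf"] = @"{ ""clauses"": [ { ""type"": ""Confidentiality"", ""summary"": ""Standard terms"", ""riskLevel"": ""Low"" } ] }"
};

foreach (string line in HighRiskClauses(analyses))
    Console.WriteLine(line);
```

The case-insensitive comparison is a deliberate choice here, since a model may return "high" or "HIGH" even when the prompt lists "High".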

Financial Data Parsing

Financial documents contain critical quantitative data embedded in complex narratives and tables. AI-powered parsing excels at financial documents because it understands context—distinguishing between historical results and forward projections, identifying whether numbers are in thousands or millions, and understanding relationships between different metrics.

The code uses pdf.Query() with a financial JSON schema to extract income statement data, balance sheet metrics, and forward guidance into structured output.

:path=/static-assets/pdf/content-code-examples/tutorials/ai-powered-pdf-processing-csharp/financial-data-extraction.cs

// Extract financial metrics from annual reports and earnings documents

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

using System.Text.Json;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

var pdf = PdfDocument.FromFile("annual-report.pdf");

// Define JSON schema for financial extraction (numbers in millions)

string financialQuery = @"Extract financial metrics from this document. Return JSON:

{

""reportPeriod"": ""string"",

""company"": ""string"",

""currency"": ""string"",

""incomeStatement"": {

""revenue"": number,

""costOfRevenue"": number,

""grossProfit"": number,

""operatingExpenses"": number,

""operatingIncome"": number,

""netIncome"": number,

""eps"": number

},

""balanceSheet"": {

""totalAssets"": number,

""totalLiabilities"": number,

""shareholdersEquity"": number,

""cash"": number,

""totalDebt"": number

},

""keyMetrics"": {

""revenueGrowthYoY"": ""string"",

""grossMargin"": ""string"",

""operatingMargin"": ""string"",

""netMargin"": ""string"",

""debtToEquity"": number

},

""guidance"": {

""nextQuarterRevenue"": ""string"",

""fullYearRevenue"": ""string"",

""notes"": ""string""

}

}

Use null for unavailable data. Numbers in millions unless stated.

Return ONLY valid JSON.";

string financialJson = await pdf.Query(financialQuery);

try

{

var financials = JsonSerializer.Deserialize<JsonElement>(financialJson);

string formatted = JsonSerializer.Serialize(financials, new JsonSerializerOptions { WriteIndented = true });

Console.WriteLine("Extracted Financial Data:");

Console.WriteLine(formatted);

File.WriteAllText("financial-data.json", formatted);

}

catch (JsonException)

{

Console.WriteLine("Unable to parse financial data");

File.WriteAllText("financial-raw.txt", financialJson);

}

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

Imports System.Text.Json

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

Dim pdf = PdfDocument.FromFile("annual-report.pdf")

' Define JSON schema for financial extraction (numbers in millions)

Dim financialQuery As String = "Extract financial metrics from this document. Return JSON:

{

""reportPeriod"": ""string"",

""company"": ""string"",

""currency"": ""string"",

""incomeStatement"": {

""revenue"": number,

""costOfRevenue"": number,

""grossProfit"": number,

""operatingExpenses"": number,

""operatingIncome"": number,

""netIncome"": number,

""eps"": number

},

""balanceSheet"": {

""totalAssets"": number,

""totalLiabilities"": number,

""shareholdersEquity"": number,

""cash"": number,

""totalDebt"": number

},

""keyMetrics"": {

""revenueGrowthYoY"": ""string"",

""grossMargin"": ""string"",

""operatingMargin"": ""string"",

""netMargin"": ""string"",

""debtToEquity"": number

},

""guidance"": {

""nextQuarterRevenue"": ""string"",

""fullYearRevenue"": ""string"",

""notes"": ""string""

}

}

Use null for unavailable data. Numbers in millions unless stated.

Return ONLY valid JSON."

Dim financialJson As String = Await pdf.Query(financialQuery)

Try

Dim financials = JsonSerializer.Deserialize(Of JsonElement)(financialJson)

Dim formatted As String = JsonSerializer.Serialize(financials, New JsonSerializerOptions With {.WriteIndented = True})

Console.WriteLine("Extracted Financial Data:")

Console.WriteLine(formatted)

File.WriteAllText("financial-data.json", formatted)

Catch ex As JsonException

Console.WriteLine("Unable to parse financial data")

File.WriteAllText("financial-raw.txt", financialJson)

End Try

The extracted structured data can feed directly into financial models, time-series databases, or analytics platforms, enabling automated tracking of metrics across reporting periods.
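
Before extracted figures reach a downstream system, a quick arithmetic check can catch misread numbers. The sketch below is one possible approach rather than an IronPDF feature: `ReadNumber` and `CheckIncomeStatement` are hypothetical helpers, the tolerance is an arbitrary assumption, and the field names follow the schema in the prompt above.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper: read a numeric field, treating missing or null values as absent.
decimal? ReadNumber(JsonElement parent, string name) =>
    parent.TryGetProperty(name, out var value) && value.ValueKind == JsonValueKind.Number
        ? value.GetDecimal()
        : (decimal?)null;

// Hypothetical check: gross profit should equal revenue minus cost of revenue,
// within a tolerance that absorbs rounding in the source report.
List<string> CheckIncomeStatement(JsonElement financials, decimal tolerance = 0.5m)
{
    var issues = new List<string>();
    if (financials.ValueKind != JsonValueKind.Object ||
        !financials.TryGetProperty("incomeStatement", out var income) ||
        income.ValueKind != JsonValueKind.Object)
    {
        issues.Add("incomeStatement is missing");
        return issues;
    }

    decimal? revenue = ReadNumber(income, "revenue");
    decimal? cost = ReadNumber(income, "costOfRevenue");
    decimal? gross = ReadNumber(income, "grossProfit");

    if (revenue is null || cost is null || gross is null)
        issues.Add("revenue, costOfRevenue, or grossProfit is unavailable");
    else if (Math.Abs(revenue.Value - cost.Value - gross.Value) > tolerance)
        issues.Add($"grossProfit {gross} does not match revenue - costOfRevenue ({revenue - cost})");

    return issues;
}

// Made-up figures in which gross profit is inconsistent with the other two values
var sample = JsonDocument.Parse(
    @"{ ""incomeStatement"": { ""revenue"": 1200, ""costOfRevenue"": 700, ""grossProfit"": 450 } }").RootElement;

foreach (string issue in CheckIncomeStatement(sample))
    Console.WriteLine(issue);
```

A failed check does not say which figure is wrong, only that the record deserves a manual look before it is stored.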

Custom Extraction Prompts

Many organizations have unique extraction requirements based on their specific domain, document formats, or business processes. IronPDF's AI integration fully supports custom extraction prompts, allowing you to define exactly what information should be extracted and how it should be structured.

This example demonstrates pdf.Query() with a research-focused schema extracting methodology, key findings with confidence levels, and limitations from academic papers.

:path=/static-assets/pdf/content-code-examples/tutorials/ai-powered-pdf-processing-csharp/custom-research-extraction.cs

// Extract structured research metadata from academic papers

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

using System.Text.Json;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

var pdf = PdfDocument.FromFile("research-paper.pdf");

// Define JSON schema for research paper extraction

string researchQuery = @"Extract structured information from this research paper. Return JSON:

{

""title"": ""string"",

""authors"": [""string""],

""institution"": ""string"",

""publicationDate"": ""string"",

""abstract"": ""string"",

""researchQuestion"": ""string"",

""methodology"": {

""type"": ""Quantitative|Qualitative|Mixed Methods"",

""approach"": ""string"",

""sampleSize"": ""string"",

""dataCollection"": ""string""

},

""keyFindings"": [

{

""finding"": ""string"",

""significance"": ""string"",

""confidence"": ""High|Medium|Low""

}

],

""limitations"": [""string""],

""futureWork"": [""string""],

""keywords"": [""string""]

}

Focus on extracting verifiable claims and noting uncertainty.

Return ONLY valid JSON.";

string extractionResult = await pdf.Query(researchQuery);

try

{

var research = JsonSerializer.Deserialize<JsonElement>(extractionResult);

string formatted = JsonSerializer.Serialize(research, new JsonSerializerOptions { WriteIndented = true });

Console.WriteLine("Research Paper Extraction:");

Console.WriteLine(formatted);

File.WriteAllText("research-extraction.json", formatted);

// Display key findings with confidence levels

Console.WriteLine("\n=== Key Findings ===");

foreach (var finding in research.GetProperty("keyFindings").EnumerateArray())

{

string confidence = finding.GetProperty("confidence").GetString() ?? "Unknown";

Console.WriteLine($"[{confidence}] {finding.GetProperty("finding")}");

}

}

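// GetProperty and GetString throw KeyNotFoundException or InvalidOperationException when a field is missing or has an unexpected type

catch (Exception ex) when (ex is JsonException or KeyNotFoundException or InvalidOperationException)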

{

Console.WriteLine("Unable to parse research extraction");

File.WriteAllText("research-raw.txt", extractionResult);

}

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

Imports System.Text.Json

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

Dim pdf = PdfDocument.FromFile("research-paper.pdf")

' Define JSON schema for research paper extraction

Dim researchQuery As String = "Extract structured information from this research paper. Return JSON:

{

""title"": ""string"",

""authors"": [""string""],

""institution"": ""string"",

""publicationDate"": ""string"",

""abstract"": ""string"",

""researchQuestion"": ""string"",

""methodology"": {

""type"": ""Quantitative|Qualitative|Mixed Methods"",

""approach"": ""string"",

""sampleSize"": ""string"",

""dataCollection"": ""string""

},

""keyFindings"": [

{

""finding"": ""string"",

""significance"": ""string"",

""confidence"": ""High|Medium|Low""

}

],

""limitations"": [""string""],

""futureWork"": [""string""],

""keywords"": [""string""]

}

Focus on extracting verifiable claims and noting uncertainty.

Return ONLY valid JSON."

Dim extractionResult As String = Await pdf.Query(researchQuery)

Try

Dim research = JsonSerializer.Deserialize(Of JsonElement)(extractionResult)

Dim formatted As String = JsonSerializer.Serialize(research, New JsonSerializerOptions With {.WriteIndented = True})

Console.WriteLine("Research Paper Extraction:")

Console.WriteLine(formatted)

File.WriteAllText("research-extraction.json", formatted)

' Display key findings with confidence levels

Console.WriteLine(vbCrLf & "=== Key Findings ===")

For Each finding In research.GetProperty("keyFindings").EnumerateArray()

Dim confidence As String = If(finding.GetProperty("confidence").GetString(), "Unknown")

Console.WriteLine($"[{confidence}] {finding.GetProperty("finding")}")

Next

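' GetProperty and GetString throw KeyNotFoundException or InvalidOperationException when a field is missing or has an unexpected type

Catch ex As Exception When TypeOf ex Is JsonException OrElse TypeOf ex Is KeyNotFoundException OrElse TypeOf ex Is InvalidOperationException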

Console.WriteLine("Unable to parse research extraction")

File.WriteAllText("research-raw.txt", extractionResult)

End Try

Custom prompts transform AI-powered extraction from a generic tool into a specialized solution tailored to your specific needs.
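
The three prompts above share one shape: a task sentence, a JSON schema, focus instructions, and a JSON-only reminder. As a sketch of how that shape could be reused for your own document types, the hypothetical `BuildExtractionPrompt` helper below assembles a prompt from those parts. The invoice schema is made up for illustration.

```csharp
using System;

// Hypothetical helper: assemble an extraction prompt in the same shape as the
// examples above (task, JSON schema, focus instructions, JSON-only reminder).
string BuildExtractionPrompt(string task, string jsonSchema, string focus)
{
    return string.Join(Environment.NewLine, new[]
    {
        $"{task} Return JSON:",
        jsonSchema.Trim(),
        focus,
        "Use null for unavailable data.",
        "Return ONLY valid JSON."
    });
}

// Made-up schema for an invoice, written in the same informal style as the prompts above
string invoiceSchema = @"{
  ""invoiceNumber"": ""string"",
  ""vendor"": ""string"",
  ""total"": number,
  ""lineItems"": [ { ""description"": ""string"", ""amount"": number } ]
}";

string prompt = BuildExtractionPrompt(
    "Extract invoice details from this document.",
    invoiceSchema,
    "Focus on amounts, dates, and vendor identity.");

Console.WriteLine(prompt);
```

The resulting string would be passed to pdf.Query() in the same way as the hand-written prompts, which keeps the schema as the only part that changes between document types.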

Question-Answering Over Documents

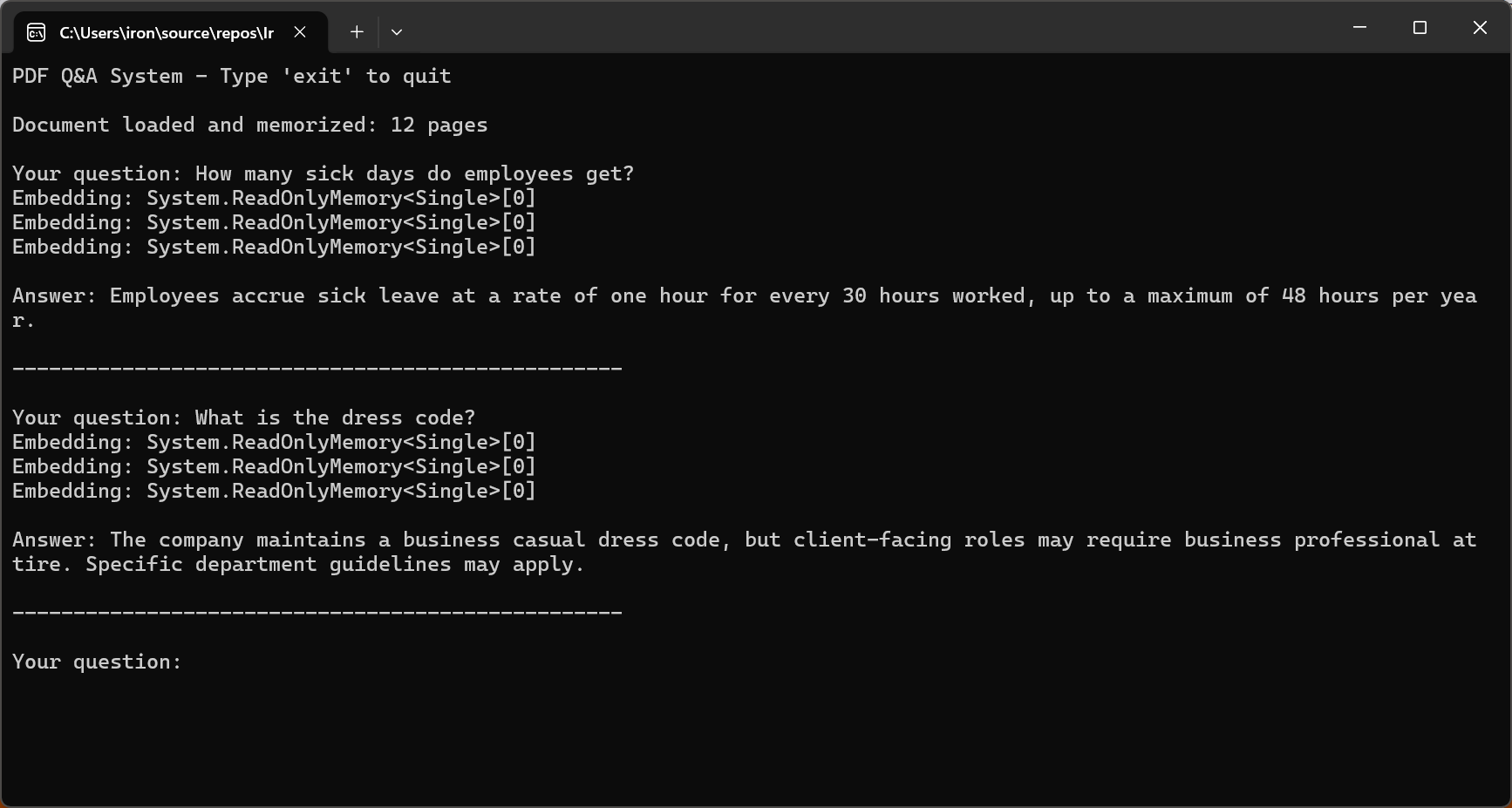

Building a PDF Q&A System

Question-answering systems enable users to interact with PDF documents conversationally, asking questions in natural language and receiving accurate, contextual answers. The basic pattern involves extracting text from the PDF, combining it with the user's question in a prompt, and requesting an answer from the AI.

The code calls pdf.Memorize() to index the document for semantic search, then enters an interactive loop using pdf.Query() to answer user questions.

:path=/static-assets/pdf/content-code-examples/tutorials/ai-powered-pdf-processing-csharp/pdf-question-answering.cs

// Interactive Q&A system for querying PDF documents

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

var pdf = PdfDocument.FromFile("sample-legal-document.pdf");

// Memorize document to enable persistent querying

await pdf.Memorize();

Console.WriteLine("PDF Q&A System - Type 'exit' to quit\n");

Console.WriteLine($"Document loaded and memorized: {pdf.PageCount} pages\n");

// Interactive Q&A loop

while (true)

{

Console.Write("Your question: ");

string? question = Console.ReadLine();

if (string.IsNullOrWhiteSpace(question) || question.ToLower() == "exit")

break;

string answer = await pdf.Query(question);

Console.WriteLine($"\nAnswer: {answer}\n");

Console.WriteLine(new string('-', 50) + "\n");

}

Console.WriteLine("Q&A session ended.");

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

Dim pdf = PdfDocument.FromFile("sample-legal-document.pdf")

' Memorize document to enable persistent querying

Await pdf.Memorize()

Console.WriteLine("PDF Q&A System - Type 'exit' to quit" & vbCrLf)

Console.WriteLine($"Document loaded and memorized: {pdf.PageCount} pages" & vbCrLf)

' Interactive Q&A loop

While True

Console.Write("Your question: ")

Dim question As String = Console.ReadLine()

If String.IsNullOrWhiteSpace(question) OrElse question.ToLower() = "exit" Then

Exit While

End If

Dim answer As String = Await pdf.Query(question)

Console.WriteLine($"{vbCrLf}Answer: {answer}{vbCrLf}")

Console.WriteLine(New String("-"c, 50) & vbCrLf)

End While

Console.WriteLine("Q&A session ended.")

The key to effective Q&A in 2026 is constraining the AI to answer based solely on document content. GPT-5's "safe completions" training method and Claude Sonnet 4.5's improved alignment reduce hallucination rates, but they do not remove the need for an explicit instruction to answer only from the document.
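
One way to apply that constraint is to wrap each user question in grounding instructions before it is sent. The sketch below assumes a hypothetical `BuildGroundedQuestion` helper; the exact wording of the instructions is an illustration, not a tested recipe.

```csharp
using System;

// Hypothetical wrapper: add grounding instructions around a user question.
string BuildGroundedQuestion(string question)
{
    const string refusal = "The document does not contain this information.";
    return
        "Answer the question using only the content of the document." + Environment.NewLine +
        $"If the answer is not in the document, reply exactly: {refusal}" + Environment.NewLine +
        "Do not rely on outside knowledge." + Environment.NewLine +
        Environment.NewLine +
        $"Question: {question.Trim()}";
}

Console.WriteLine(BuildGroundedQuestion("What is the notice period for termination?"));
```

In the interactive loop above, the call would become pdf.Query(BuildGroundedQuestion(question)). A fixed refusal sentence also makes it easy to detect programmatically when the document had no answer.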

Chunking Long Documents for Context Windows

Many real-world documents, and most document collections, exceed AI context windows, so effective chunking strategies are essential. Chunking divides a document into segments small enough to fit within a context window while preserving semantic coherence.

This code iterates through pdf.Pages, creating DocumentChunk objects with configurable maxChunkTokens and overlapTokens for context continuity.

:path=/static-assets/pdf/content-code-examples/tutorials/ai-powered-pdf-processing-csharp/semantic-document-chunking.cs

// Split long documents into overlapping chunks for RAG systems

using IronPdf;

var pdf = PdfDocument.FromFile("long-document.pdf");

// Chunking configuration

int maxChunkTokens = 4000; // Leave room for prompts and responses

int overlapTokens = 200; // Overlap for context continuity

int approxCharsPerToken = 4; // Rough estimate for tokenization

int maxChunkChars = maxChunkTokens * approxCharsPerToken;

int overlapChars = overlapTokens * approxCharsPerToken;

var chunks = new List<DocumentChunk>();

var currentChunk = new System.Text.StringBuilder();

int chunkStartPage = 1;

int currentPage = 1;

for (int i = 0; i < pdf.PageCount; i++)

{

string pageText = pdf.Pages[i].Text;

currentPage = i + 1;

if (currentChunk.Length + pageText.Length > maxChunkChars && currentChunk.Length > 0)

{

chunks.Add(new DocumentChunk

{

Text = currentChunk.ToString(),

StartPage = chunkStartPage,

EndPage = currentPage - 1,

ChunkIndex = chunks.Count

});

// Create overlap with previous chunk for continuity

string overlap = currentChunk.Length > overlapChars

? currentChunk.ToString().Substring(currentChunk.Length - overlapChars)

: currentChunk.ToString();

currentChunk.Clear();

currentChunk.Append(overlap);

chunkStartPage = currentPage - 1;

}

currentChunk.AppendLine($"\n--- Page {currentPage} ---\n");

currentChunk.Append(pageText);

}

if (currentChunk.Length > 0)

{

chunks.Add(new DocumentChunk

{

Text = currentChunk.ToString(),

StartPage = chunkStartPage,

EndPage = currentPage,

ChunkIndex = chunks.Count

});

}

Console.WriteLine($"Document chunked into {chunks.Count} segments");

foreach (var chunk in chunks)

{

Console.WriteLine($" Chunk {chunk.ChunkIndex + 1}: Pages {chunk.StartPage}-{chunk.EndPage} ({chunk.Text.Length} chars)");

}

// Save chunk metadata for RAG indexing

File.WriteAllText("chunks-metadata.json", System.Text.Json.JsonSerializer.Serialize(

chunks.Select(c => new { c.ChunkIndex, c.StartPage, c.EndPage, Length = c.Text.Length }),

new System.Text.Json.JsonSerializerOptions { WriteIndented = true }

));

public class DocumentChunk

{

public string Text { get; set; } = "";

public int StartPage { get; set; }

public int EndPage { get; set; }

public int ChunkIndex { get; set; }

}

Imports IronPdf

Imports System.Text

Imports System.Text.Json

Imports System.IO

' Split long documents into overlapping chunks for RAG systems

Dim pdf = PdfDocument.FromFile("long-document.pdf")

' Chunking configuration

Dim maxChunkTokens As Integer = 4000 ' Leave room for prompts and responses

Dim overlapTokens As Integer = 200 ' Overlap for context continuity

Dim approxCharsPerToken As Integer = 4 ' Rough estimate for tokenization

Dim maxChunkChars As Integer = maxChunkTokens * approxCharsPerToken

Dim overlapChars As Integer = overlapTokens * approxCharsPerToken

Dim chunks As New List(Of DocumentChunk)()

Dim currentChunk As New StringBuilder()

Dim chunkStartPage As Integer = 1

Dim currentPage As Integer = 1

For i As Integer = 0 To pdf.PageCount - 1

Dim pageText As String = pdf.Pages(i).Text

currentPage = i + 1

If currentChunk.Length + pageText.Length > maxChunkChars AndAlso currentChunk.Length > 0 Then

chunks.Add(New DocumentChunk With {

.Text = currentChunk.ToString(),

.StartPage = chunkStartPage,

.EndPage = currentPage - 1,

.ChunkIndex = chunks.Count

})

' Create overlap with previous chunk for continuity

Dim overlap As String = If(currentChunk.Length > overlapChars,

currentChunk.ToString().Substring(currentChunk.Length - overlapChars),

currentChunk.ToString())

currentChunk.Clear()

currentChunk.Append(overlap)

chunkStartPage = currentPage - 1

End If

currentChunk.AppendLine(vbCrLf & "--- Page " & currentPage & " ---" & vbCrLf)

currentChunk.Append(pageText)

Next

If currentChunk.Length > 0 Then

chunks.Add(New DocumentChunk With {

.Text = currentChunk.ToString(),

.StartPage = chunkStartPage,

.EndPage = currentPage,

.ChunkIndex = chunks.Count

})

End If

Console.WriteLine($"Document chunked into {chunks.Count} segments")

For Each chunk In chunks

Console.WriteLine($" Chunk {chunk.ChunkIndex + 1}: Pages {chunk.StartPage}-{chunk.EndPage} ({chunk.Text.Length} chars)")

Next

' Save chunk metadata for RAG indexing

File.WriteAllText("chunks-metadata.json", JsonSerializer.Serialize(

chunks.Select(Function(c) New With {Key .ChunkIndex = c.ChunkIndex, Key .StartPage = c.StartPage, Key .EndPage = c.EndPage, Key .Length = c.Text.Length}),

New JsonSerializerOptions With {.WriteIndented = True}

))

Public Class DocumentChunk

Public Property Text As String = ""

Public Property StartPage As Integer

Public Property EndPage As Integer

Public Property ChunkIndex As Integer

End Class

Overlapping chunks provide continuity across boundaries, ensuring the AI has sufficient context even when relevant information spans chunk boundaries.
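
To make the overlap concrete, here is a minimal sliding-window sketch over a plain string. `SplitWithOverlap` is a hypothetical helper, and it works on characters rather than tokens. The tiny sizes are chosen only so the shared characters are easy to see.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: split text into windows of at most maxChars characters,
// where each window repeats the last overlapChars characters of the previous one.
List<string> SplitWithOverlap(string text, int maxChars, int overlapChars)
{
    if (maxChars <= 0)
        throw new ArgumentOutOfRangeException(nameof(maxChars));
    if (overlapChars < 0 || overlapChars >= maxChars)
        throw new ArgumentOutOfRangeException(nameof(overlapChars));

    var windows = new List<string>();
    int step = maxChars - overlapChars; // How far each window advances
    for (int start = 0; start < text.Length; start += step)
    {
        int length = Math.Min(maxChars, text.Length - start);
        windows.Add(text.Substring(start, length));
        if (start + length >= text.Length)
            break; // The last window reached the end of the text
    }
    return windows;
}

foreach (string window in SplitWithOverlap("ABCDEFGHIJ", maxChars: 4, overlapChars: 1))
    Console.WriteLine(window);
// Prints ABCD, DEFG, GHIJ on separate lines: D and G appear in two windows each
```

A helper like this could also be applied to a single page whose text is longer than maxChunkChars, a case the page-based loop above adds to a chunk without splitting.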

RAG (Retrieval-Augmented Generation) Patterns

Retrieval-Augmented Generation represents a powerful pattern for AI-powered document analysis in 2026. Rather than feeding entire documents to the AI, RAG systems first retrieve only the relevant portions for a given query, then use those portions as context for generating answers.

The RAG workflow has three main phases: document preparation (chunking and creating embeddings), retrieval (searching for relevant chunks), and generation (using retrieved chunks as context for AI responses).

The code indexes multiple PDFs by calling pdf.Memorize() on each, then uses pdf.Query() to retrieve answers from the combined document memory.

:path=/static-assets/pdf/content-code-examples/tutorials/ai-powered-pdf-processing-csharp/rag-system-implementation.cs

// Retrieval-Augmented Generation (RAG) system for querying across multiple indexed documents

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

// Index all documents in folder

string[] documentPaths = Directory.GetFiles("documents/", "*.pdf");

Console.WriteLine($"Indexing {documentPaths.Length} documents...\n");

// Memorize each document (creates embeddings for retrieval)

foreach (string path in documentPaths)

{

var pdf = PdfDocument.FromFile(path);

await pdf.Memorize();

Console.WriteLine($"Indexed: {Path.GetFileName(path)} ({pdf.PageCount} pages)");

}

Console.WriteLine("\n=== RAG System Ready ===\n");

// Query across all indexed documents

string query = "What are the key compliance requirements for data retention?";

Console.WriteLine($"Query: {query}\n");

var searchPdf = PdfDocument.FromFile(documentPaths[0]);

string answer = await searchPdf.Query(query);

Console.WriteLine($"Answer: {answer}");

// Interactive query loop

Console.WriteLine("\n--- Enter questions (type 'exit' to quit) ---\n");

while (true)

{

Console.Write("Question: ");

string? userQuery = Console.ReadLine();

if (string.IsNullOrWhiteSpace(userQuery) || userQuery.ToLower() == "exit")

break;

string response = await searchPdf.Query(userQuery);

Console.WriteLine($"\nAnswer: {response}\n");

}

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

Imports System.IO

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

' Index all documents in folder

Dim documentPaths As String() = Directory.GetFiles("documents/", "*.pdf")

Console.WriteLine($"Indexing {documentPaths.Length} documents..." & vbCrLf)

' Memorize each document (creates embeddings for retrieval)

For Each path As String In documentPaths

Dim pdf = PdfDocument.FromFile(path)

Await pdf.Memorize()

Console.WriteLine($"Indexed: {Path.GetFileName(path)} ({pdf.PageCount} pages)")

Next

Console.WriteLine(vbCrLf & "=== RAG System Ready ===" & vbCrLf)

' Query across all indexed documents

Dim query As String = "What are the key compliance requirements for data retention?"

Console.WriteLine($"Query: {query}" & vbCrLf)

Dim searchPdf = PdfDocument.FromFile(documentPaths(0))

Dim answer As String = Await searchPdf.Query(query)

Console.WriteLine($"Answer: {answer}")

' Interactive query loop

Console.WriteLine(vbCrLf & "--- Enter questions (type 'exit' to quit) ---" & vbCrLf)

While True

Console.Write("Question: ")

Dim userQuery As String = Console.ReadLine()

If String.IsNullOrWhiteSpace(userQuery) OrElse userQuery.ToLower() = "exit" Then

Exit While

End If

Dim response As String = Await searchPdf.Query(userQuery)

Console.WriteLine(vbCrLf & $"Answer: {response}" & vbCrLf)

End While

RAG systems excel at handling large document collections such as legal case databases, technical documentation libraries, and research archives. By retrieving only the relevant portions, they maintain response quality while scaling to very large document collections.
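
The retrieval phase usually comes down to ranking stored chunk embeddings by their similarity to the query embedding. The sketch below shows that ranking with cosine similarity on toy three-dimensional vectors. It does not show how IronPDF or Semantic Kernel implement retrieval internally. `Cosine`, `TopK`, the chunk ids, and all vector values are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Cosine similarity between two vectors of equal length.
double Cosine(float[] a, float[] b)
{
    double dot = 0, normA = 0, normB = 0;
    for (int i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }
    return normA == 0 || normB == 0 ? 0 : dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
}

// Return the ids of the k chunks whose embeddings are most similar to the query embedding.
List<string> TopK(Dictionary<string, float[]> embeddingsById, float[] query, int k) =>
    embeddingsById.OrderByDescending(entry => Cosine(entry.Value, query))
                  .Take(k)
                  .Select(entry => entry.Key)
                  .ToList();

// Toy vectors standing in for real embeddings, which have hundreds or thousands of dimensions
var store = new Dictionary<string, float[]>
{
    ["retention-policy p.4"] = new[] { 0.9f, 0.1f, 0.0f },
    ["office-locations p.1"] = new[] { 0.0f, 0.2f, 0.9f },
    ["audit-procedure p.7"] = new[] { 0.7f, 0.6f, 0.1f },
};
float[] queryEmbedding = { 1.0f, 0.2f, 0.0f };

Console.WriteLine(string.Join(", ", TopK(store, queryEmbedding, 2)));
// Prints: retention-policy p.4, audit-procedure p.7
```

Only the top-ranked chunks are then placed in the prompt for the generation phase, which is what keeps the context small regardless of how many documents are indexed.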

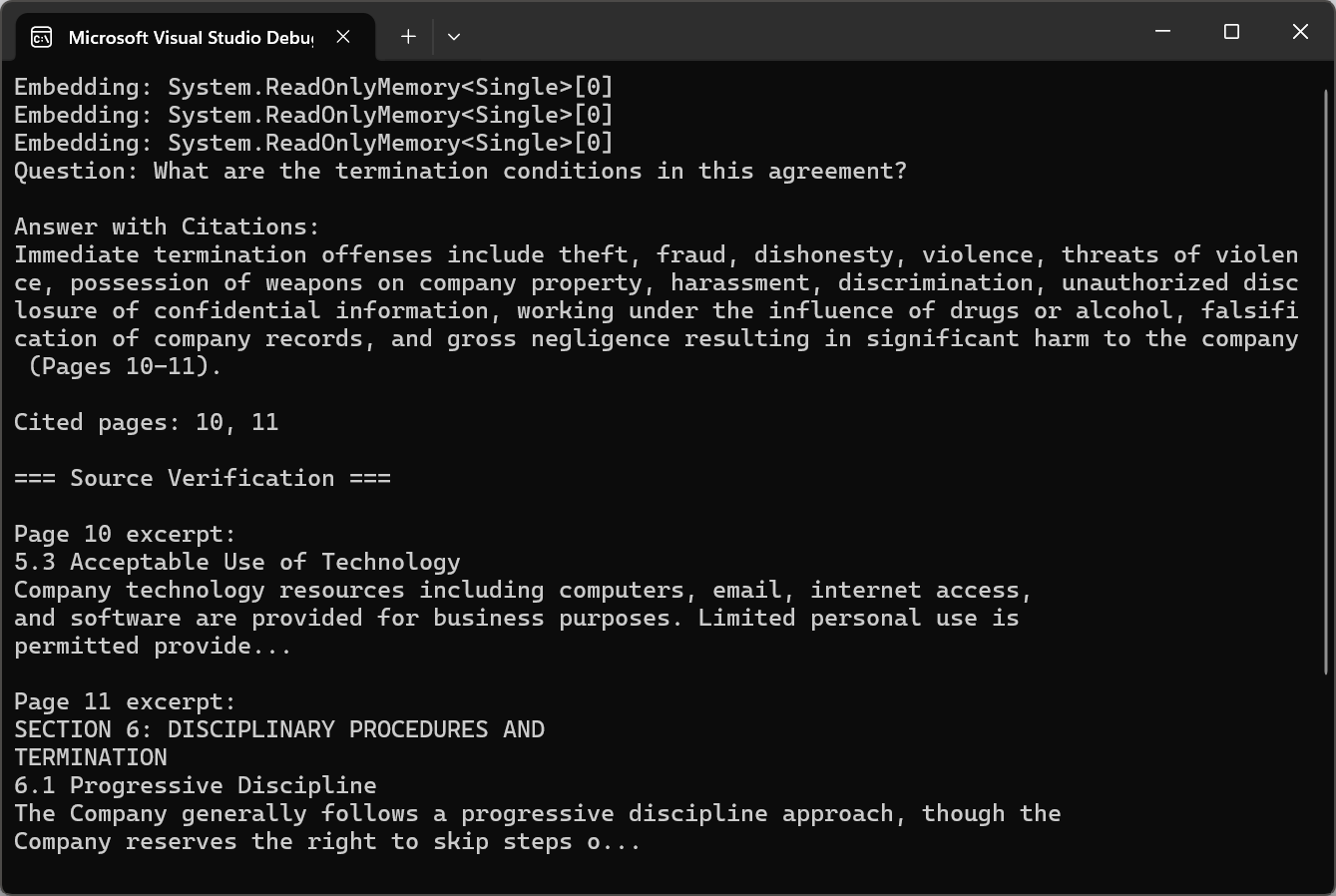

Citing Sources from PDF Pages

For professional applications, AI answers must be verifiable. The citation approach involves maintaining metadata about chunk origins during chunking and retrieval. Each chunk stores not just text content but also its source page numbers, section headers, and position in the document.
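
As a rough sketch of that metadata, each retrieved chunk below carries its section and page range, and the context handed to the model tags every chunk with its source. `FormatCitation`, the tuple layout, and the sample text are hypothetical. The citation format matches the (Page X) and (Pages X-Y) convention used in this section.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: format a chunk's page range as "(Page X)" or "(Pages X-Y)".
string FormatCitation(int startPage, int endPage) =>
    startPage == endPage ? $"(Page {startPage})" : $"(Pages {startPage}-{endPage})";

// Each retrieved chunk carries its origin alongside its text (made-up sample data).
var retrieved = new List<(string Text, string Section, int StartPage, int EndPage)>
{
    ("Either party may terminate with 30 days written notice.", "Termination", 12, 12),
    ("Termination for cause requires a cure period.", "Termination", 12, 13),
};

// Build the context passed to the model, tagging every chunk with its source.
string context = string.Join(Environment.NewLine, retrieved.Select(chunk =>
    $"[{chunk.Section}] {FormatCitation(chunk.StartPage, chunk.EndPage)} {chunk.Text}"));

Console.WriteLine(context);
```

Because the page tags travel with the text, the model can copy them into its answer, and the answer can then be checked against the original pages.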

The code uses pdf.Query() with citation instructions, then calls ExtractCitedPages() with regex to parse page references and verify sources using pdf.Pages[pageNum - 1].Text.

:path=/static-assets/pdf/content-code-examples/tutorials/ai-powered-pdf-processing-csharp/answer-with-citations.cs

// Answer questions with page citations and source verification

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

using System.Text.RegularExpressions;

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

var pdf = PdfDocument.FromFile("sample-legal-document.pdf");

await pdf.Memorize();

string question = "What are the termination conditions in this agreement?";

// Request citations in query

string citationQuery = $@"{question}

IMPORTANT: Include specific page citations in your answer using the format (Page X) or (Pages X-Y).

Only cite information that appears in the document.";

string answerWithCitations = await pdf.Query(citationQuery);

Console.WriteLine("Question: " + question);

Console.WriteLine("\nAnswer with Citations:");

Console.WriteLine(answerWithCitations);

// Extract cited page numbers using regex

var citedPages = ExtractCitedPages(answerWithCitations);

Console.WriteLine($"\nCited pages: {string.Join(", ", citedPages)}");

// Verify citations with page excerpts

Console.WriteLine("\n=== Source Verification ===");

foreach (int pageNum in citedPages.Take(3))

{

if (pageNum <= pdf.PageCount && pageNum > 0)

{

string pageText = pdf.Pages[pageNum - 1].Text;

string excerpt = pageText.Length > 200 ? pageText.Substring(0, 200) + "..." : pageText;

Console.WriteLine($"\nPage {pageNum} excerpt:\n{excerpt}");

}

}

// Extract page numbers from citation format (Page X) or (Pages X-Y)

List<int> ExtractCitedPages(string text)

{

var pages = new HashSet<int>();

var matches = Regex.Matches(text, @"\(Pages?\s*(\d+)(?:\s*-\s*(\d+))?\)", RegexOptions.IgnoreCase);

foreach (Match match in matches)

{

int startPage = int.Parse(match.Groups[1].Value);

pages.Add(startPage);

if (match.Groups[2].Success)

{

int endPage = int.Parse(match.Groups[2].Value);

for (int p = startPage; p <= endPage; p++)

pages.Add(p);

}

}

return pages.OrderBy(p => p).ToList();

}

Imports IronPdf

Imports IronPdf.AI

Imports Microsoft.SemanticKernel

Imports Microsoft.SemanticKernel.Memory

Imports Microsoft.SemanticKernel.Connectors.OpenAI

Imports System.Text.RegularExpressions

' Azure OpenAI configuration

Dim azureEndpoint As String = "https://your-resource.openai.azure.com/"

Dim apiKey As String = "your-azure-api-key"

Dim chatDeployment As String = "gpt-4o"

Dim embeddingDeployment As String = "text-embedding-ada-002"

' Initialize Semantic Kernel

Dim kernel = Kernel.CreateBuilder() _

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey) _

.Build()

Dim memory = New MemoryBuilder() _

.WithMemoryStore(New VolatileMemoryStore()) _

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey) _

.Build()

IronDocumentAI.Initialize(kernel, memory)

Dim pdf = PdfDocument.FromFile("sample-legal-document.pdf")

Await pdf.Memorize()

Dim question As String = "What are the termination conditions in this agreement?"

' Request citations in query

Dim citationQuery As String = $"{question}

IMPORTANT: Include specific page citations in your answer using the format (Page X) or (Pages X-Y).

Only cite information that appears in the document."

Dim answerWithCitations As String = Await pdf.Query(citationQuery)

Console.WriteLine("Question: " & question)

Console.WriteLine(vbCrLf & "Answer with Citations:")

Console.WriteLine(answerWithCitations)

' Extract cited page numbers using regex

Dim citedPages = ExtractCitedPages(answerWithCitations)

Console.WriteLine(vbCrLf & "Cited pages: " & String.Join(", ", citedPages))

' Verify citations with page excerpts

Console.WriteLine(vbCrLf & "=== Source Verification ===")

For Each pageNum As Integer In citedPages.Take(3)

If pageNum <= pdf.PageCount AndAlso pageNum > 0 Then

Dim pageText As String = pdf.Pages(pageNum - 1).Text

Dim excerpt As String = If(pageText.Length > 200, pageText.Substring(0, 200) & "...", pageText)

Console.WriteLine(vbCrLf & "Page " & pageNum & " excerpt:" & vbCrLf & excerpt)

End If

Next

' Extract page numbers from citation format (Page X) or (Pages X-Y)

Function ExtractCitedPages(text As String) As List(Of Integer)

Dim pages = New HashSet(Of Integer)()

Dim matches = Regex.Matches(text, "\((Pages?)\s*(\d+)(?:\s*-\s*(\d+))?\)", RegexOptions.IgnoreCase)

For Each match As Match In matches

Dim startPage As Integer = Integer.Parse(match.Groups(2).Value)

pages.Add(startPage)

If match.Groups(3).Success Then

Dim endPage As Integer = Integer.Parse(match.Groups(3).Value)

For p As Integer = startPage To endPage

pages.Add(p)

Next

End If

Next

Return pages.OrderBy(Function(p) p).ToList()

End Function

Console Output

Citations transform AI-generated answers from opaque outputs into transparent, verifiable information. Users can review source material to validate answers and build confidence in AI-assisted analysis.

Batch AI Processing

Processing Document Libraries at Scale

Enterprise document processing often involves thousands or millions of PDFs. The foundation of scalable batch processing is parallelization. IronPDF is thread-safe, allowing concurrent PDF processing without interference.

This code uses SemaphoreSlim with configurable maxConcurrency to process PDFs in parallel, calling pdf.Summarize() on each while tracking results in a ConcurrentBag.

:path=/static-assets/pdf/content-code-examples/tutorials/ai-powered-pdf-processing-csharp/batch-document-processing.cs

// Process multiple documents in parallel with rate limiting

using IronPdf;

using IronPdf.AI;

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.Memory;

using Microsoft.SemanticKernel.Connectors.OpenAI;

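using System;

using System.Collections.Concurrent;

using System.IO;

using System.Linq;

using System.Text;

using System.Threading;

using System.Threading.Tasks;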

// Azure OpenAI configuration

string azureEndpoint = "https://your-resource.openai.azure.com/";

string apiKey = "your-azure-api-key";

string chatDeployment = "gpt-4o";

string embeddingDeployment = "text-embedding-ada-002";

// Initialize Semantic Kernel

var kernel = Kernel.CreateBuilder()

.AddAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.AddAzureOpenAIChatCompletion(chatDeployment, azureEndpoint, apiKey)

.Build();

var memory = new MemoryBuilder()

.WithMemoryStore(new VolatileMemoryStore())

.WithAzureOpenAITextEmbeddingGeneration(embeddingDeployment, azureEndpoint, apiKey)

.Build();

IronDocumentAI.Initialize(kernel, memory);

// Configure parallel processing with rate limiting

int maxConcurrency = 3;

string inputFolder = "documents/";

string outputFolder = "summaries/";

Directory.CreateDirectory(outputFolder);

string[] pdfFiles = Directory.GetFiles(inputFolder, "*.pdf");

Console.WriteLine($"Processing {pdfFiles.Length} documents...\n");

var results = new ConcurrentBag<ProcessingResult>();

var semaphore = new SemaphoreSlim(maxConcurrency);

var tasks = pdfFiles.Select(async filePath =>

{

await semaphore.WaitAsync();

var result = new ProcessingResult { FilePath = filePath };

try

{

var stopwatch = System.Diagnostics.Stopwatch.StartNew();

// Dispose each document when its task finishes so large batches do not accumulate memory

using var pdf = PdfDocument.FromFile(filePath);

string summary = await pdf.Summarize();

string outputPath = Path.Combine(outputFolder,

Path.GetFileNameWithoutExtension(filePath) + "-summary.txt");

await File.WriteAllTextAsync(outputPath, summary);

stopwatch.Stop();

result.Success = true;

result.ProcessingTime = stopwatch.Elapsed;

result.OutputPath = outputPath;

Console.WriteLine($"[OK] {Path.GetFileName(filePath)} ({stopwatch.ElapsedMilliseconds}ms)");

}

catch (Exception ex)

{

result.Success = false;

result.ErrorMessage = ex.Message;

Console.WriteLine($"[ERROR] {Path.GetFileName(filePath)}: {ex.Message}");

}

finally

{

semaphore.Release();

results.Add(result);

}

}).ToArray();

await Task.WhenAll(tasks);

// Generate processing report

var successful = results.Where(r => r.Success).ToList();

var failed = results.Where(r => !r.Success).ToList();

var report = new StringBuilder();

report.AppendLine("=== Batch Processing Report ===");

report.AppendLine($"Successful: {successful.Count}");

report.AppendLine($"Failed: {failed.Count}");

if (successful.Any())

{

var avgTime = TimeSpan.FromMilliseconds(successful.Average(r => r.ProcessingTime.TotalMilliseconds));

report.AppendLine($"Average processing time: {avgTime.TotalSeconds:F1}s");

}

if (failed.Any())

{

report.AppendLine("\nFailed documents:");

foreach (var fail in failed)